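This is an improved version of the previous deployment.

Last time I used docker, but cephadm actually uses podman by default, so configuring only docker has no effect, and podman then tends to hang while pulling images.

This time I tried both docker and podman, each with registry mirrors or a proxy, and settled on podman with registry mirrors.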
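To make the engine preference above concrete, here is a minimal sketch that reports which container engine would be found first on a host when podman is tried before docker. `detect_engine` is a hypothetical helper written for this note. It only mirrors the preference order described above and is not cephadm's own code.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first container engine found on PATH,
# trying podman before docker (the order assumed from the text above).
detect_engine() {
    for engine in podman docker; do
        if command -v "$engine" >/dev/null 2>&1; then
            echo "$engine"
            return 0
        fi
    done
    return 1
}

# Report the result; fall back to a message when neither engine is installed.
detect_engine || echo "no container engine found"
```

If this prints `podman` on a node, mirror settings made only on the docker side are unlikely to affect the image pulls on that node.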

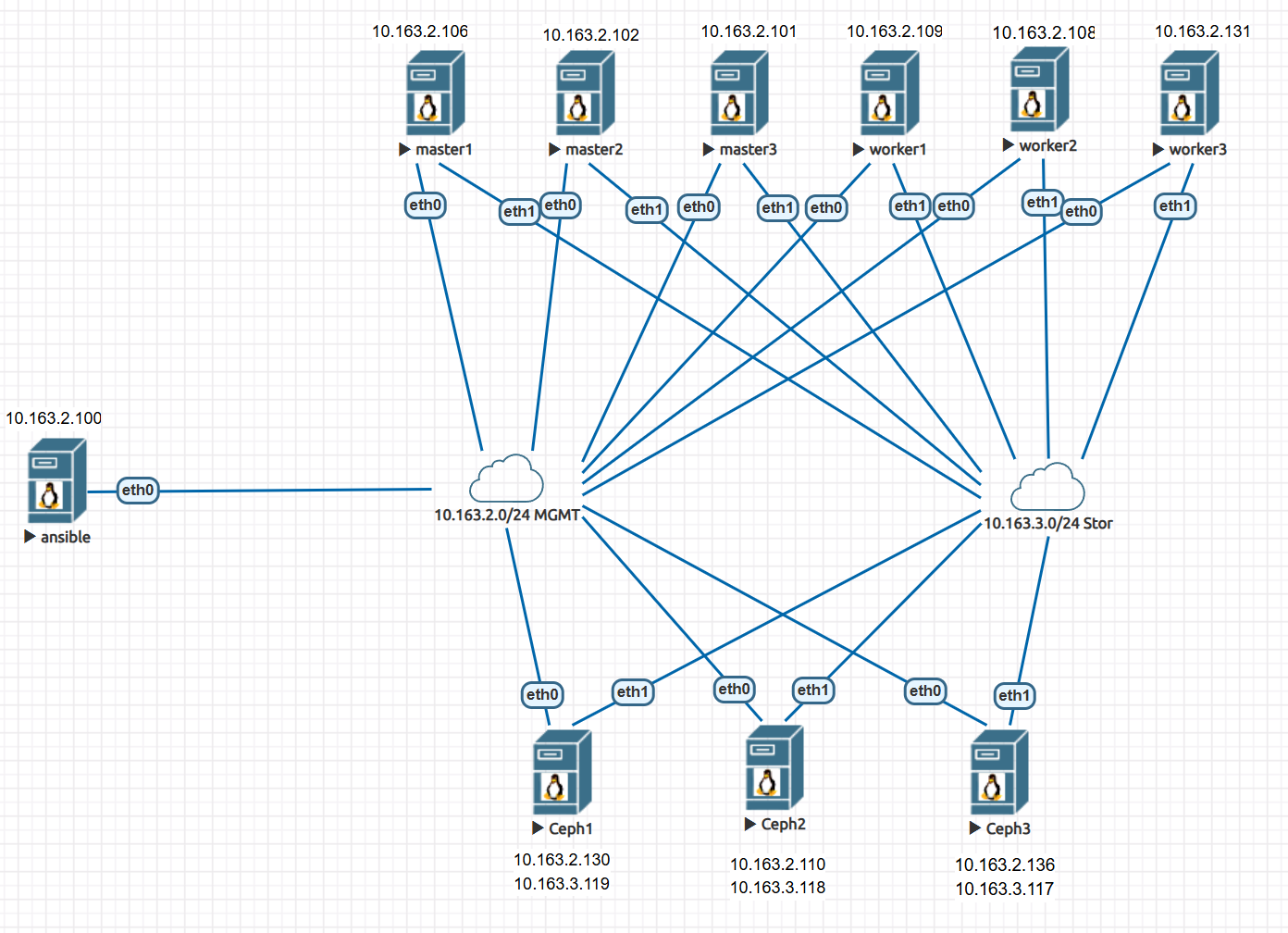

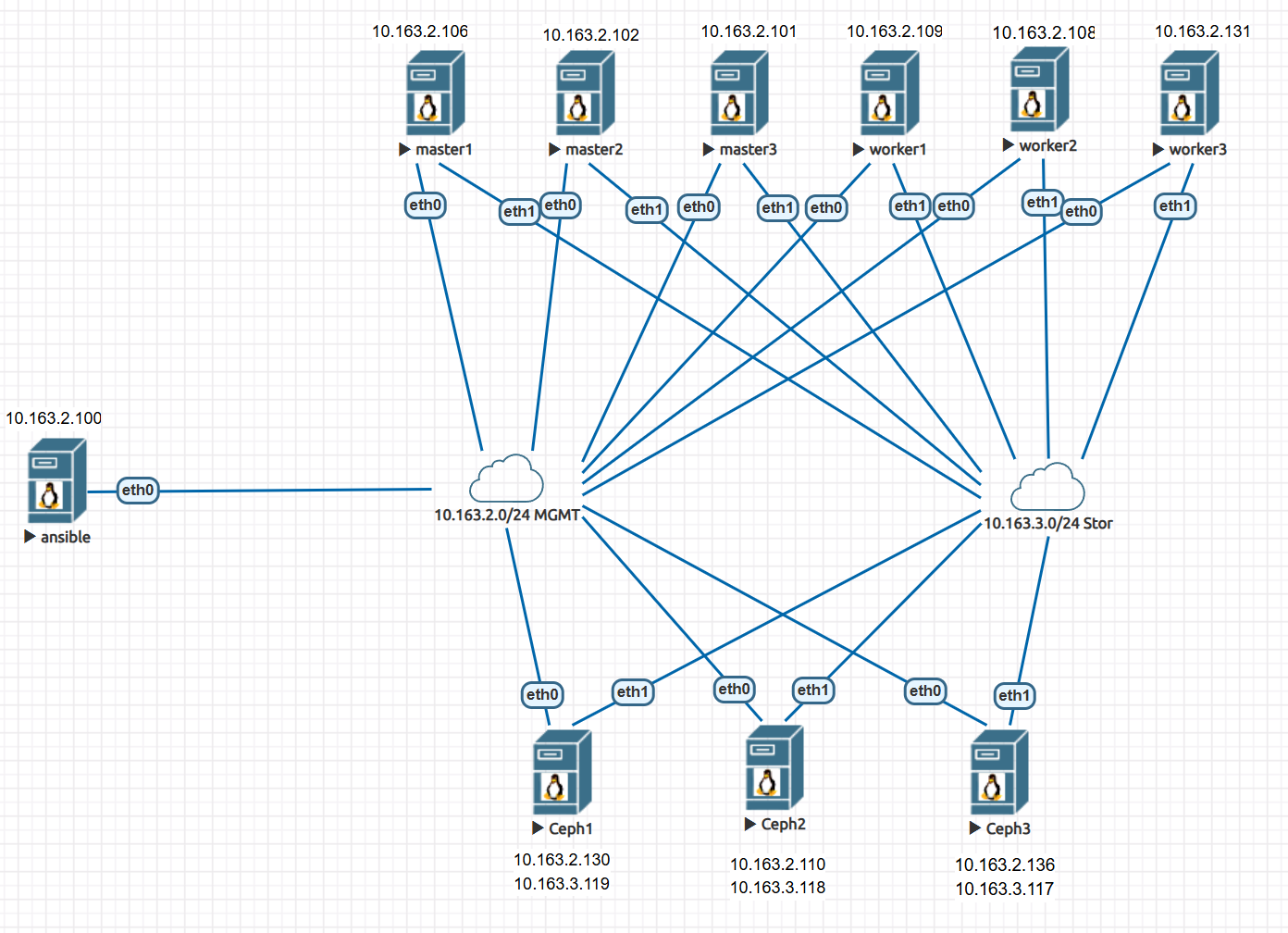

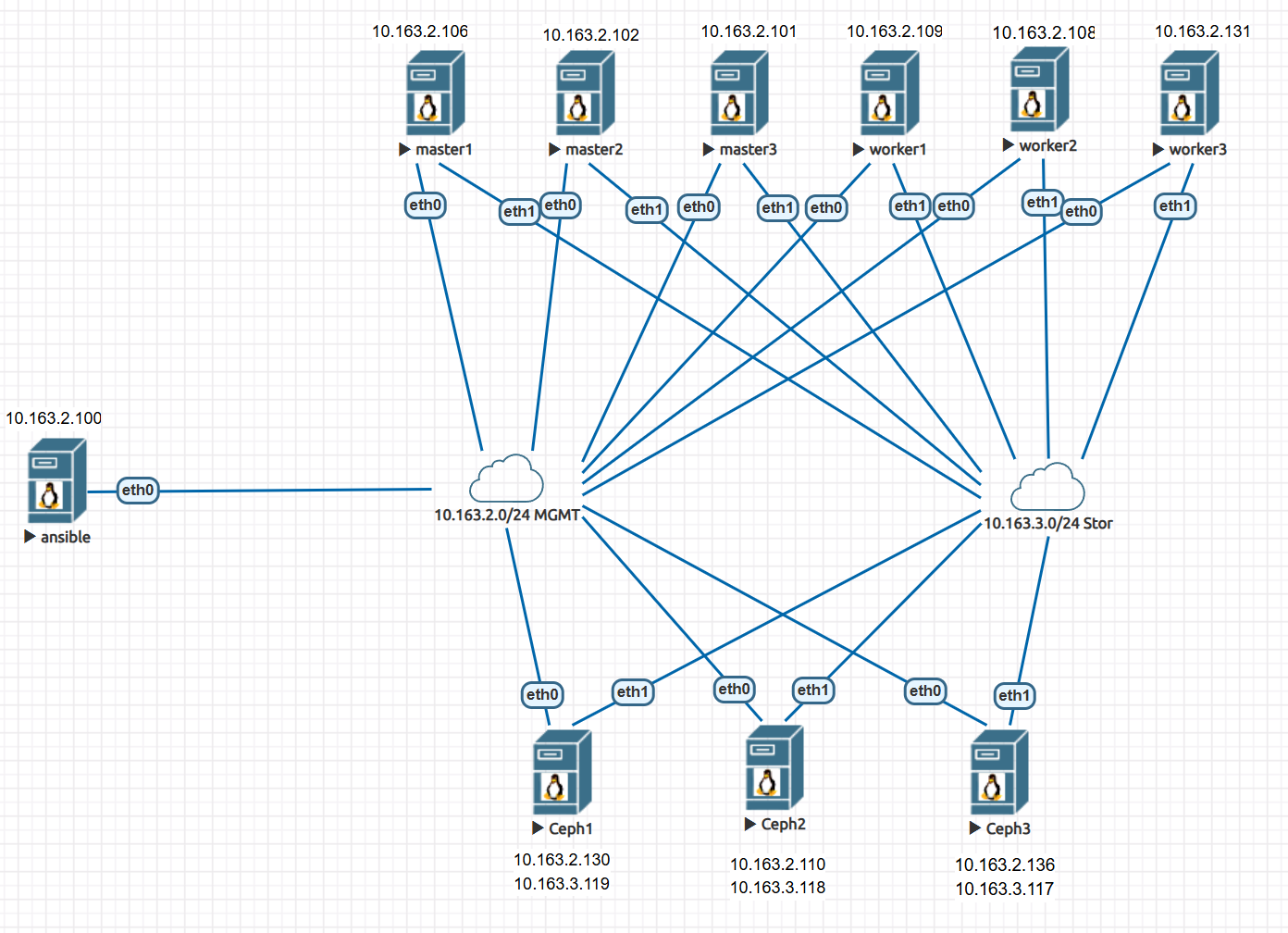

| 10.163.2.100 ansible (Rocky 8.10)
10.163.2.130 10.163.3.119 ceph1 (Rocky 8.10)
10.163.2.110 10.163.3.118 ceph2
10.163.2.136 10.163.3.117 ceph3
ceph v17.2.7 quincy

cat > /etc/hosts <<EOF
10.163.2.100 ansible
10.163.2.130 ceph1
10.163.2.110 ceph2
10.163.2.136 ceph3
EOF

yum -y install epel-release
yum -y install python3 ansible
git clone https://github.com/ceph/cephadm-ansible.git
cd cephadm-ansible

cat > registries.conf <<EOF
unqualified-search-registries = ["docker.io","quay.io"]

[[registry]]
prefix = "docker.io"
location = "a88uijg4.mirror.aliyuncs.com"
insecure = true

[[registry.mirror]]
location = "docker.lmirror.top"

[[registry.mirror]]
location = "docker.m.daocloud.io"

[[registry.mirror]]
location = "hub.uuuadc.top"

[[registry.mirror]]
location = "docker.anyhub.us.kg"

[[registry.mirror]]
location = "dockerhub.jobcher.com"

[[registry.mirror]]
location = "dockerhub.icu"

[[registry]]
prefix = "quay.io"
location = "quay.lmirror.top"

[[registry.mirror]]
location = "quay.m.daocloud.io"
EOF

cat > inventory <<EOF
[admin]
ansible ansible_host=10.163.2.100

[storage]
ceph1 ansible_host=10.163.2.130
ceph2 ansible_host=10.163.2.110
ceph3 ansible_host=10.163.2.136

[all:vars]
ansible_password=1
ansible_user=root
EOF

sed -i 's/^ceph_release.*/ceph_release: 17.2.7/g' ceph_defaults/defaults/main.yml
sed -i 's#^ceph_mirror.*#ceph_mirror: https://mirrors.aliyun.com/ceph#g' ceph_defaults/defaults/main.yml
sed -i 's#^ceph_stable_key.*#ceph_stable_key: https://mirrors.aliyun.com/ceph/keys/release.asc#g' ceph_defaults/defaults/main.yml

ansible -i inventory all -m shell -a "hostnamectl set-hostname {{ inventory_hostname }}" -b
ansible -i inventory all -m yum -a 'name=bash-completion,epel-release state=present'
ansible-playbook -i inventory cephadm-preflight.yml
ansible -i inventory all -m copy -a 'src=./registries.conf dest=/etc/containers/registries.conf'

curl --silent --remote-name --location https://github.com/ceph/ceph/raw/quincy/src/cephadm/cephadm
chmod +x cephadm
mv cephadm /usr/local/bin/

export http_proxy=http://192.168.10.238:7897
export https_proxy=http://192.168.10.238:7897

cephadm bootstrap --mon-ip=10.163.2.100 \
  --cluster-network 10.163.3.0/24 \
  --initial-dashboard-user=admin \
  --initial-dashboard-password=wangsheng \
  --dashboard-password-noupdate --allow-fqdn-hostname \
  --allow-overwrite

ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph1
ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph2
ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph3

ceph orch host add ceph1 10.163.2.130
ceph orch host add ceph2 10.163.2.110
ceph orch host add ceph3 10.163.2.136

ceph orch apply mon ceph1,ceph2,ceph3
ceph orch apply mgr --placement "ceph1 ceph2 ceph3"

cat > /etc/ceph/osd.yaml <<EOF
service_type: osd
service_id: default_group
placement:
  hosts:
    - ceph1
    - ceph2
    - ceph3
data_devices:
  paths:
    - /dev/sdb
    - /dev/sdc
    - /dev/sdd
EOF

ceph orch apply -i /etc/ceph/osd.yaml

ceph orch ls
NAME               PORTS        RUNNING  REFRESHED  AGE  PLACEMENT
alertmanager       ?:9093,9094      1/1  39s ago    19m  count:1
crash                               4/4  4m ago     19m  *
grafana            ?:3000           0/1  -          19m  count:1
mgr                                 3/3  4m ago     6m   ceph1;ceph2;ceph3
mon                                 3/3  4m ago     15m  ceph1;ceph2;ceph3
node-exporter      ?:9100           4/4  4m ago     19m  *
osd.default_group                     9  4m ago     6m   ceph1;ceph2;ceph3
prometheus         ?:9095           1/1  39s ago    19m  count:1

ceph config set global cluster_network "10.163.3.0/24"
...
|
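Since image pulls are where this deployment tends to hang, it may help to check which hosts named in `registries.conf` actually respond before running the bootstrap. The sketch below is one possible pre-check, not part of podman or cephadm. `list_locations` and `probe_location` are hypothetical helpers, the 5-second timeout is an arbitrary choice, and it assumes every `location` is a bare hostname serving HTTPS, as in the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every host given as location = "..." in a
# registries.conf file, one per line, in file order.
list_locations() {
    sed -n 's/^[[:space:]]*location[[:space:]]*=[[:space:]]*"\([^"]*\)".*$/\1/p' "$1"
}

# Hypothetical helper: request the registry API root of one host.
# Any HTTP response (even 401) counts as reachable; only connection
# errors and timeouts make curl return non-zero here.
probe_location() {
    curl --silent --output /dev/null --max-time 5 "https://$1/v2/"
}

# Run the probe loop only when a file path is passed as the first argument.
if [ -n "${1:-}" ]; then
    for host in $(list_locations "$1"); do
        if probe_location "$host"; then
            echo "ok   $host"
        else
            echo "fail $host"
        fi
    done
fi
```

Saved under a name of your choosing and run with `/etc/containers/registries.conf` as its argument on any node, it prints one `ok` or `fail` line per listed host. A `fail` only shows that the host did not answer within the timeout, so it is a hint rather than proof that the mirror is unusable.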