GitHub repository

https://github.com/ceph/ceph-csi/tree/release-v3.9

The versions it supports can be checked on GitHub.

I chose ceph-csi v3.9 because my Kubernetes cluster runs v1.27.

Reference documentation: https://github.com/ceph/ceph-csi/blob/release-v3.9/docs/deploy-rbd.md

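- Prepare the Ceph configuration and key: turn your Ceph cluster information (monitor addresses, keyring) into a Secret and a ConfigMap that Kubernetes can use.

- Deploy the RBAC resources: create the service accounts, roles, and bindings that the Ceph-CSI components need in order to run with the required permissions in Kubernetes.

- Deploy the CSI plugin: deploy the Deployment and DaemonSet of the CSI driver itself (with containers such as the csi-provisioner sidecar and csi-rbdplugin).

- Create a StorageClass: define a storage class that uses the newly deployed Ceph-CSI driver, so that PVCs can be created against it later.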

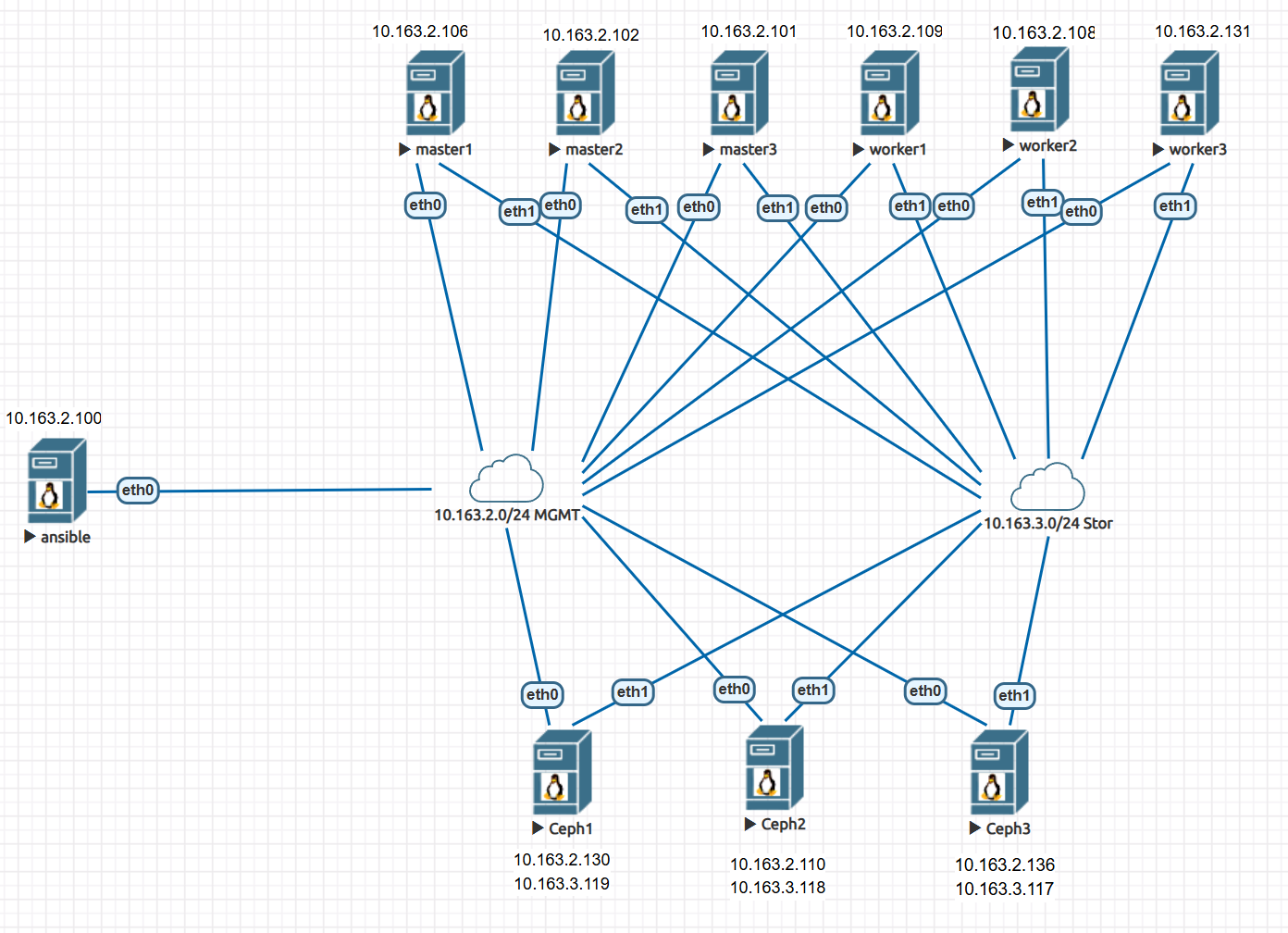

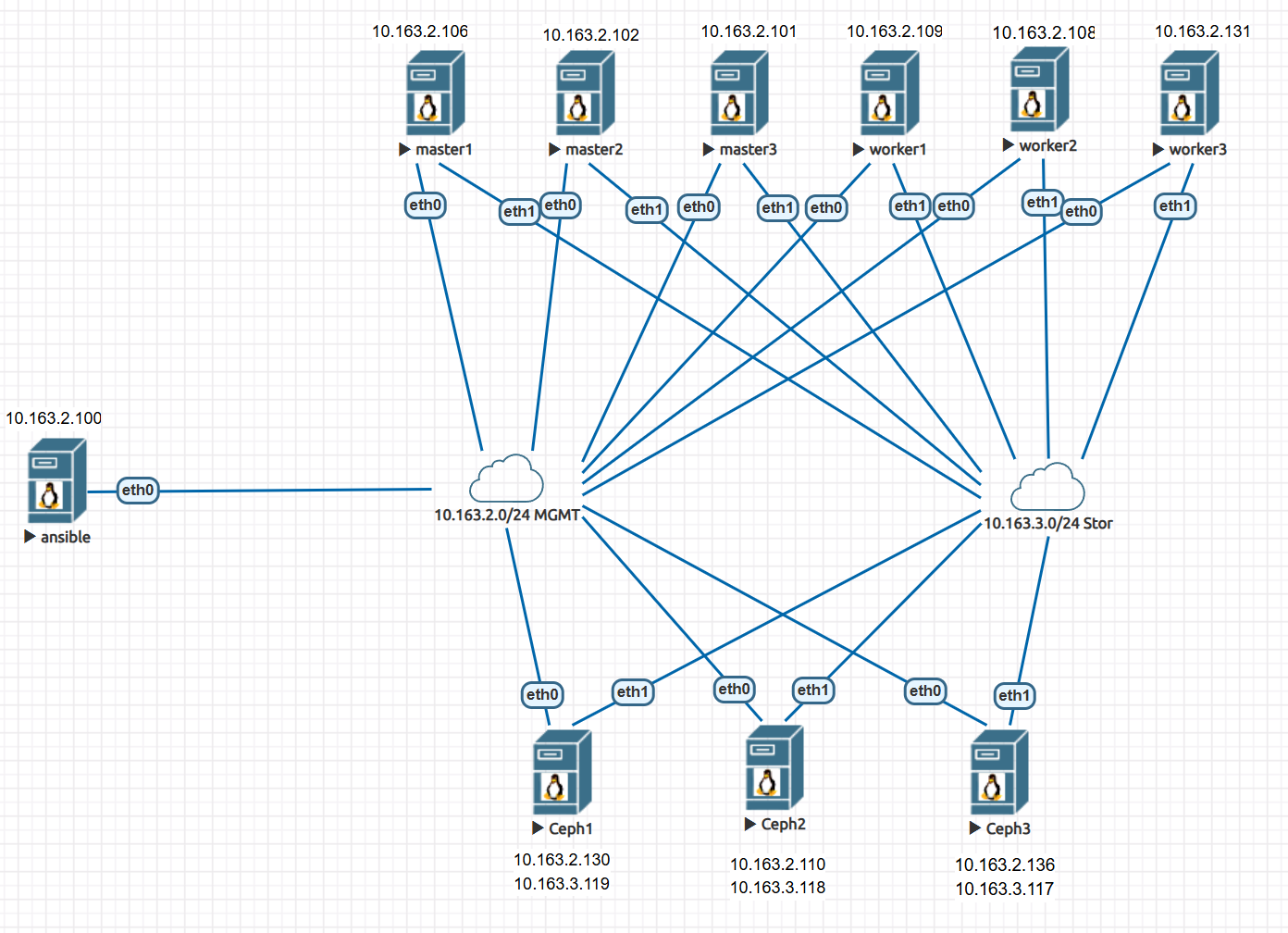

At this point both the Kubernetes cluster and the Ceph cluster are ready.

Prepare Ceph


    # Create one pool per performance tier (64 PGs each)
    ceph osd pool create k8s-main 64 64
    ceph osd pool create k8s-high-perf 64 64
    ceph osd pool create k8s-low-perf 64 64

    # Initialize the pools for RBD and tag them with the rbd application
    rbd pool init k8s-main
    rbd pool init k8s-high-perf
    rbd pool init k8s-low-perf
    ceph osd pool application enable k8s-main rbd
    ceph osd pool application enable k8s-high-perf rbd
    ceph osd pool application enable k8s-low-perf rbd

    # The three pools could be mapped onto different OSDs here
    # (for example with separate CRUSH rules); this step is skipped.

    # Create the client.k8s user with access to the three pools
    ceph auth get-or-create client.k8s \
      mon 'profile rbd' mgr 'allow r' \
      osd 'allow rwx pool=k8s-main, allow rwx pool=k8s-high-perf, allow rwx pool=k8s-low-perf' \
      -o /etc/ceph/ceph.client.k8s.keyring

    cat /etc/ceph/ceph.client.k8s.keyring
    [client.k8s]
        key = AQBT0cdoenFlCRAADbBKxKAPfBEq2uF5GTx3eg==
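The next section needs three values from the Ceph side: the cluster ID, the monitor addresses, and the key of `client.k8s`. The steps here copy them by hand; the sketch below is one possible way to collect them. `ceph fsid`, `ceph mon dump`, and `ceph auth get-key` are standard Ceph commands, while the helper name `keyring_key` is made up for this illustration. Using the fsid as the `clusterID` is an assumption that matches the UUID-style value used later.

```shell
#!/bin/sh
# keyring_key FILE: print the secret stored in a Ceph keyring file.
# Hypothetical helper name, not part of Ceph or ceph-csi.
keyring_key() {
  # Keyring lines look like "key = <base64>"; print the value of the first match.
  awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Typical usage on a Ceph admin node (commented out: needs a live cluster):
#   ceph fsid                        # cluster fsid, assumed here to be the clusterID
#   ceph mon dump                    # lists the monitor addresses
#   keyring_key /etc/ceph/ceph.client.k8s.keyring
#   ceph auth get-key client.k8s     # same key without reading the file
```

Reading the key with a helper avoids copy-and-paste mistakes when it is later pasted into the Kubernetes Secret.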

Deploy Ceph-CSI


    # Install kubectl on this host and point it at the cluster
    curl -LO https://dl.k8s.io/release/v1.27.0/bin/linux/amd64/kubectl
    chmod +x kubectl
    cp kubectl /usr/bin/
    mkdir -p /root/.kube
    scp master1:~/.kube/config ~/.kube/config
    echo "10.163.2.150 lb-apiserver.kubernetes.local" >> /etc/hosts

    # Fetch the manifests that match the chosen release
    git clone -b release-v3.9 https://github.com/ceph/ceph-csi.git
    cd ceph-csi/deploy/rbd/kubernetes/

    # ConfigMap with the cluster ID and monitor addresses
    cat > csi-config-map.yaml <<EOF
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ceph-csi-config
    data:
      config.json: |-
        [
          {
            "clusterID": "e8c93664-91ed-11f0-b06e-500000010000",
            "monitors": [
              "10.163.2.136:6789",
              "10.163.2.130:6789",
              "10.163.2.110:6789"
            ]
          }
        ]
    EOF
    kubectl apply -f csi-config-map.yaml

    # Secret with the client.k8s credentials
    cat > csi-rbd-secret.yaml <<EOF
    apiVersion: v1
    kind: Secret
    metadata:
      name: csi-rbd-secret
      namespace: default
    stringData:
      userID: k8s
      userKey: AQBT0cdoenFlCRAADbBKxKAPfBEq2uF5GTx3eg==
    EOF
    kubectl apply -f csi-rbd-secret.yaml

    # Empty KMS configuration (no encryption)
    cat > csi-kms-config-map.yaml <<EOF
    ---
    apiVersion: v1
    kind: ConfigMap
    data:
      config.json: |-
        {}
    metadata:
      name: ceph-csi-encryption-kms-config
    EOF
    kubectl apply -f csi-kms-config-map.yaml

    # CSIDriver object, RBAC, provisioner Deployment, and node plugin DaemonSet
    kubectl apply -f csidriver.yaml
    kubectl apply -f csi-provisioner-rbac.yaml
    kubectl apply -f csi-nodeplugin-rbac.yaml
    kubectl apply -f csi-rbdplugin-provisioner.yaml
    kubectl apply -f csi-rbdplugin.yaml

    kubectl get pods
    NAME                                         READY   STATUS    RESTARTS   AGE
    csi-rbdplugin-69fs7                          3/3     Running   0          56s
    csi-rbdplugin-6nhp4                          3/3     Running   0          49s
    csi-rbdplugin-blzjf                          3/3     Running   0          46s
    csi-rbdplugin-dd8t9                          3/3     Running   0          51s
    csi-rbdplugin-jvkr2                          3/3     Running   0          59s
    csi-rbdplugin-provisioner-869d9d7f59-8nb9k   7/7     Running   0          56s
    csi-rbdplugin-provisioner-869d9d7f59-gvz4x   7/7     Running   0          52s
    csi-rbdplugin-provisioner-869d9d7f59-vlkxn   7/7     Running   0          49s
    csi-rbdplugin-s59bl                          3/3     Running   0          54s
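Before moving on to the StorageClass it helps to confirm that every plugin pod is fully ready, as in the listing above. The following is a rough sketch of an automated check; `not_ready_pods` is a hypothetical helper name, and it only parses the default table layout that `kubectl get pods` prints.

```shell
#!/bin/sh
# not_ready_pods: read `kubectl get pods` table output on stdin and print the
# names of pods whose READY count is incomplete or whose STATUS is not Running.
# Hypothetical helper for illustration only.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, ready, "/")   # READY column looks like "3/3"
         if (ready[1] != ready[2] || $3 != "Running") print $1
       }'
}

# Usage against a live cluster (commented out: needs kubectl access):
#   kubectl get pods | not_ready_pods
# An empty result means every listed pod is Running with all containers ready.
# kubectl can also wait by itself, for example:
#   kubectl rollout status daemonset/csi-rbdplugin
#   kubectl rollout status deployment/csi-rbdplugin-provisioner
```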

    # StorageClass backed by the k8s-main pool
    cat > storageclass.yaml <<EOF
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: csi-rbd-sc
    provisioner: rbd.csi.ceph.com
    parameters:
      clusterID: e8c93664-91ed-11f0-b06e-500000010000
      pool: k8s-main
      imageFeatures: layering
      csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
      csi.storage.k8s.io/provisioner-secret-namespace: default
      csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
      csi.storage.k8s.io/node-stage-secret-namespace: default
      csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
      csi.storage.k8s.io/controller-expand-secret-namespace: default
      csi.storage.k8s.io/node-expand-secret-name: csi-rbd-secret
      csi.storage.k8s.io/node-expand-secret-namespace: default
    allowVolumeExpansion: true
    reclaimPolicy: Retain
    mountOptions:
      - discard
    EOF
    kubectl apply -f storageclass.yaml

    kubectl get sc
    NAME         PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    csi-rbd-sc   rbd.csi.ceph.com   Retain          Immediate           true                   3s
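Only `k8s-main` gets a StorageClass here, although three pools were created. If the other two pools should be usable from Kubernetes as well, a small template along these lines might do. This is a sketch under assumptions: the helper `render_storageclass` and the class names `csi-rbd-sc-high-perf` and `csi-rbd-sc-low-perf` are made up, and the parameters are simply copied from `storageclass.yaml`.

```shell
#!/bin/sh
# render_storageclass NAME POOL: print a StorageClass manifest for one RBD pool.
# Hypothetical helper; all parameters mirror the storageclass.yaml used above.
render_storageclass() {
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $1
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: e8c93664-91ed-11f0-b06e-500000010000
  pool: $2
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-expand-secret-namespace: default
allowVolumeExpansion: true
reclaimPolicy: Retain
mountOptions:
  - discard
EOF
}

# Print manifests for the two remaining pools (class names are assumptions).
render_storageclass csi-rbd-sc-high-perf k8s-high-perf
echo '---'
render_storageclass csi-rbd-sc-low-perf k8s-low-perf
```

The output is a plain multi-document YAML stream, so it can be reviewed first and then piped into `kubectl apply -f -`.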

Test the StorageClass

I had an AI generate a StatefulSet manifest, which makes it possible to test directly whether the volume claim template works.


    # The heredoc delimiter is quoted so that $(hostname) and $(date) are
    # evaluated inside the pod, not by the local shell that writes the file.
    cat > test_statefulset.yaml <<'EOF'
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: ceph-rbd-test
      namespace: default
    spec:
      serviceName: "ceph-rbd-test"
      replicas: 2
      selector:
        matchLabels:
          app: ceph-rbd-test
      template:
        metadata:
          labels:
            app: ceph-rbd-test
        spec:
          containers:
            - name: test-container
              image: alpine:latest
              command: ["/bin/sh", "-c"]
              args:
                - |
                  echo "Pod $(hostname) started at $(date)" > /data/pod-info.txt;
                  echo "Testing write operation..." >> /data/pod-info.txt;
                  while true; do sleep 3600; done
              volumeMounts:
                - name: data
                  mountPath: /data
      volumeClaimTemplates:
        - metadata:
            name: data
          spec:
            accessModes: [ "ReadWriteOnce" ]
            storageClassName: "csi-rbd-sc"
            resources:
              requests:
                storage: 1Gi
    EOF
    kubectl apply -f test_statefulset.yaml

    kubectl get pods
    NAME              READY   STATUS    RESTARTS   AGE
    ceph-rbd-test-0   1/1     Running   0          6m26s
    ceph-rbd-test-1   1/1     Running   0          5m33s

    kubectl get pvc
    NAME                   STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    data-ceph-rbd-test-0   Bound    pvc-67d3a05c-271a-4567-b429-be47f7de3cec   1Gi        RWO            csi-rbd-sc     42m
    data-ceph-rbd-test-1   Bound    pvc-0b486d04-5ea9-4eb5-972e-05c2a001b043   1Gi        RWO            csi-rbd-sc     5m43s

    kubectl exec -it ceph-rbd-test-0 -- cat /data/pod-info.txt
    Pod ansible started at Mon Sep 15 07:43:13 PM CST 2025
    Testing write operation...

Both PVCs were created and bound, and the content was written to the volume.

The output above was captured with an unquoted `<<EOF`, so `$(hostname)` and `$(date)` appear to have been expanded by the local shell when the file was written, which is why the line shows the host name `ansible` rather than the pod name. With the quoted delimiter used here, the line would show the pod's own hostname and start time instead.
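The same result can be cross-checked from the Ceph side by listing the RBD images in the pool. The sketch below assumes that ceph-csi names its images with the `csi-vol-` prefix (its default, as far as I know); `count_csi_images` is a hypothetical helper, while `rbd ls` is a standard command.

```shell
#!/bin/sh
# count_csi_images: count RBD image names on stdin that start with "csi-vol-",
# which is assumed here to be the ceph-csi default volume name prefix.
count_csi_images() {
  # grep -c exits non-zero when nothing matches; keep the "0" and succeed anyway.
  grep -c '^csi-vol-' || true
}

# Usage on a Ceph admin node (commented out: needs a live cluster):
#   rbd ls k8s-main | count_csi_images
# The count should cover at least the two PVCs created by the StatefulSet.
```

Because the StorageClass uses `reclaimPolicy: Retain`, deleting the test StatefulSet and its PVCs afterwards leaves the PVs and their RBD images in place, so the count does not drop until those are removed manually.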