
Network environment

For deploying Ceph, see the blog post 《ceph分布式存储》 ("Ceph Distributed Storage").

192.168.10.121 ws-k8s-master1

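Using RBD block storage directly on k8s hosts

The hyper-converged cloud nodes run Ceph and create RBD images. These images are then provided to the k8s nodes as disks.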

Connecting k8s directly to ceph-rbd

Note: once an RBD image is mounted by one pod, it cannot be mounted by another pod.

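Preparation

1. Install ceph-common on the k8s nodes. Copy ceph.repo to every node, then install the package with yum.

```bash
scp /etc/yum.repos.d/ceph.repo ws-k8s-master1:/etc/yum.repos.d/
...
yum -y install ceph-common
```

2. Copy the Ceph configuration files from ceph-node1 to every node.

```bash
scp /etc/ceph/* ws-k8s-master1:/etc/ceph/
...
```

3. Create an RBD pool in Ceph.

```bash
ceph osd pool create k8s-rbd 128
ceph osd pool application enable k8s-rbd rbd
```

4. Create a volume (image) in the RBD pool. Then disable the image features that the kernel RBD client on the k8s nodes may not support.

```bash
rbd create k8s-rbd/disk1 --size 10G
rbd feature disable k8s-rbd/disk1 object-map fast-diff deep-flatten
```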
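`rbd feature disable` may fail when a listed feature is already off. When step 4 is scripted for several images, it can therefore help to disable only the features that are still enabled. Below is a minimal sketch. It assumes `rbd info` prints a `features:` line in the usual comma-separated form. The helper name `features_to_disable` is hypothetical, and it only checks the three features this post disables.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads the output of `rbd info <pool>/<image>` on stdin
# and prints which of object-map, fast-diff, deep-flatten are still enabled.
# Assumption: the feature list matches the one disabled in step 4 above.
features_to_disable() {
  local line enabled f
  local out=()
  # Take the "features:" line; the anchored regex skips "op_features:".
  line=$(grep -E '^[[:space:]]*features:' | head -n 1)
  # "features: a, b, c" -> ",a,b,c," so each name is matched as a whole word.
  enabled=",$(printf '%s' "${line#*:}" | tr -d ' \t'),"
  for f in object-map fast-diff deep-flatten; do
    if [[ $enabled == *",$f,"* ]]; then
      out+=("$f")
    fi
  done
  # Space-separated list; empty output means nothing needs disabling.
  printf '%s\n' "${out[*]}"
}

# Usage:
#   feats=$(rbd info k8s-rbd/disk1 | features_to_disable)
#   [ -n "$feats" ] && rbd feature disable k8s-rbd/disk1 $feats
```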

Mounting

Create a working directory and write a pod that mounts the RBD image:

```bash
cd ~
mkdir ceph
cd ceph
cat > test-rbd.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: test-rbd
spec:
  containers:
  - image: 192.168.10.130/wangsheng/solo:1.0
    imagePullPolicy: IfNotPresent
    name: test-rbd
    volumeMounts:
    - name: rbd
      mountPath: /mnt
  volumes:
  - name: rbd
    rbd:
      monitors:
      - '192.168.10.141:6789'
      - '192.168.10.142:6789'
      - '192.168.10.143:6789'
      pool: k8s-rbd
      image: disk1
      fsType: xfs
      readOnly: false
      user: admin
      keyring: /etc/ceph/ceph.client.admin.keyring
EOF
kubectl apply -f test-rbd.yaml
kubectl describe pod test-rbd | grep -i volumes -A 10
```

Output:

```
Volumes:
  rbd:
    Type:          RBD (a Rados Block Device mount on the host that shares a pod's lifetime)
    CephMonitors:  [192.168.10.141:6789 192.168.10.142:6789 192.168.10.143:6789]
    RBDImage:      disk1
    FSType:        xfs
    RBDPool:       k8s-rbd
    RadosUser:     admin
    Keyring:       /etc/ceph/ceph.client.admin.keyring
    SecretRef:     nil
    ReadOnly:      false
```
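`kubectl describe` shows only the volume definition. To confirm the image is actually mounted, you can read the filesystem type at `/mnt` from `/proc/mounts` inside the container, without opening an interactive shell. This is a sketch. It assumes the `solo:1.0` image ships `cat`. `check_mount_fstype` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: checks that PATH inside POD is mounted with fs type WANT.
# Assumes the container image provides `cat`.
check_mount_fstype() {
  local pod=$1 path=$2 want=$3 fstype
  # /proc/mounts fields: device mountpoint fstype options dump pass.
  # If a path is mounted more than once, the last (topmost) entry wins.
  fstype=$(kubectl exec "$pod" -- cat /proc/mounts \
    | awk -v p="$path" '$2 == p { t = $3 } END { print t }')
  if [[ $fstype == "$want" ]]; then
    echo "$pod: $path is mounted as $fstype"
  else
    echo "$pod: expected $want at $path, found '${fstype:-nothing}'" >&2
    return 1
  fi
}

# Usage for the pod above:
#   check_mount_fstype test-rbd /mnt xfs
```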

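Manually creating a PV backed by ceph-rbd

This addresses the limitation that an RBD volume can only be mounted by a single pod.

A PV backed by ceph-rbd lets multiple pods mount the space on the RBD image.

However, the RBD volume still cannot be mounted across nodes. The PV below uses the ReadWriteOnce access mode, so only a single node can mount it read-write, and sharing works only among pods on that node. If the node goes down and the pods are rescheduled to another node, they will fail to mount the volume.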

1. Install ceph-common on the k8s nodes.

2. Get the key of the Ceph user client.admin, base64-encoded, for storing in the Secret:

```bash
ceph auth get-key client.admin | base64
```

Output:

```
QVFDTEtmZGxESHN6Q1JBQWdnRXlZeHRodzJyY3htb1ZzTFVPb1E9PQ==
```

3. Create the Secret object ceph-secret. The k8s volume plugin uses it to access the Ceph cluster.

```bash
cat > ceph-secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: QVFDTEtmZGxESHN6Q1JBQWdnRXlZeHRodzJyY3htb1ZzTFVPb1E9PQ==
EOF
kubectl apply -f ceph-secret.yaml
```

4. Create another image, disk2, in the pool:

```bash
rbd create k8s-rbd/disk2 --size 10G
rbd feature disable k8s-rbd/disk2 object-map fast-diff deep-flatten
```

5. Create a PV that uses disk2:

```bash
cat > ceph-pv.yaml << EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-pv                # name of the PersistentVolume
spec:
  capacity:
    storage: 10Gi              # capacity of the volume: 10 GiB
  accessModes:
    - ReadWriteOnce            # read-write by a single node
  rbd:
    monitors:                  # Ceph monitor addresses
      - '192.168.10.141:6789'
      - '192.168.10.142:6789'
      - '192.168.10.143:6789'
    pool: k8s-rbd              # RBD pool
    image: disk2               # RBD image
    user: admin                # Ceph user
    secretRef:
      name: ceph-secret        # Secret holding the user's key
    fsType: xfs                # filesystem type
    readOnly: false            # mount read-write
  persistentVolumeReclaimPolicy: Recycle   # reclaim policy Recycle (deprecated)
EOF
kubectl apply -f ceph-pv.yaml
kubectl get pv
```

Output:

```
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
ceph-pv   10Gi       RWO            Recycle          Available                                   3m14s
```

6. Create a PVC:

```bash
cat > ceph-pvc.yaml << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
EOF
kubectl apply -f ceph-pvc.yaml
kubectl get pvc
```

Output:

```
NAME       STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ceph-pvc   Bound    ceph-pv   10Gi       RWO                           6s
```

7. Test mounting the PVC from pods:

```bash
cat > pod-pvc.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ceph-pvc-test
spec:
  selector:
    matchLabels:
      app: solo
  replicas: 2
  template:
    metadata:
      labels:
        app: solo
    spec:
      containers:
      - name: solo
        image: 192.168.10.130/wangsheng/solo:1.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
        volumeMounts:
        - mountPath: "/ceph-data"
          name: ceph-data
      volumes:
      - name: ceph-data
        persistentVolumeClaim:
          claimName: ceph-pvc
EOF
kubectl apply -f pod-pvc.yaml
kubectl get pods | grep pvc
```

Output:

```
ceph-pvc-test-76c79b74d9-m9vw6   1/1     Running   0          116s
ceph-pvc-test-76c79b74d9-qkkzd   1/1     Running   0          116s
```
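Because the PV is ReadWriteOnce, both replicas can only be Running if they share a node. A replica scheduled elsewhere would typically sit in ContainerCreating with a Multi-Attach error. The sketch below checks this by listing each pod's `spec.nodeName` through a jsonpath query. The function name `pods_on_single_node` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if all pods matching SELECTOR run on one node.
pods_on_single_node() {
  local selector=$1 nodes count
  # One nodeName per line, de-duplicated; empty lines (unscheduled pods) dropped.
  nodes=$(kubectl get pods -l "$selector" \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' | sort -u | sed '/^$/d')
  count=$(printf '%s' "$nodes" | grep -c .)
  echo "nodes for $selector: ${nodes//$'\n'/ }"
  [[ $count -eq 1 ]]
}

# Usage for the Deployment above:
#   pods_on_single_node app=solo
```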

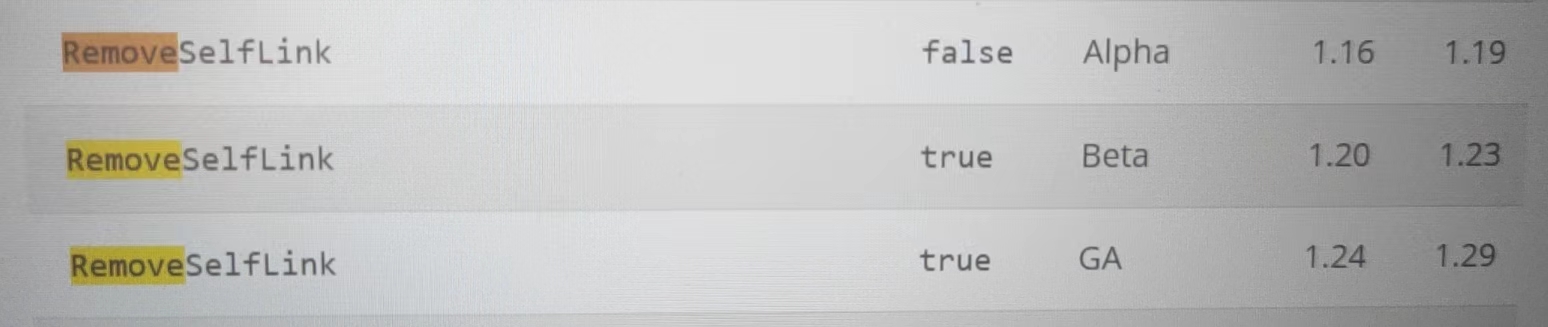

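Automatically provisioning PVs with a StorageClass

Note: this experiment does not work on k8s 1.24 or later. The rbd-provisioner used below relies on the objects' selfLink field. Starting with 1.24, the RemoveSelfLink feature gate is locked to true and can no longer be changed, so selfLink cannot be turned back on.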
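On 1.20 through 1.23, the gate is on by default but can still be turned off. To do that, add `--feature-gates=RemoveSelfLink=false` to the kube-apiserver flags (on a kubeadm cluster, in `/etc/kubernetes/manifests/kube-apiserver.yaml`). The sketch below decides from a server version string whether that is still possible. `selflink_can_be_enabled` is a hypothetical name. Obtaining the version with `jq` is an assumption about your tooling.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if a k8s server version (e.g. "v1.23.17")
# is older than 1.24, i.e. RemoveSelfLink can still be set to false.
selflink_can_be_enabled() {
  local ver=${1#v} major minor
  major=${ver%%.*}
  minor=${ver#*.}
  minor=${minor%%.*}
  # Drop vendor suffixes such as "27+" or "24-eks".
  minor=${minor%%[!0-9]*}
  (( major == 1 && minor < 24 ))
}

# Usage (assumes jq is installed):
#   v=$(kubectl version -o json | jq -r .serverVersion.gitVersion)
#   selflink_can_be_enabled "$v" || echo "k8s $v: this provisioner will not work"
```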

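Preparation

Give the files in /etc/ceph full permissions on the Ceph nodes and the k8s nodes. Then create the /root/.ceph directory and copy the contents of /etc/ceph into it. Copying `/etc/ceph/.` copies the directory's contents; copying `/etc/ceph/` would instead create `/root/.ceph/ceph/`.

```bash
chmod 777 -R /etc/ceph/*
ssh ws-k8s-master2 "chmod 777 -R /etc/ceph/* && mkdir -p /root/.ceph && cp -a /etc/ceph/. /root/.ceph/"
ssh ceph-node1 "chmod 777 -R /etc/ceph/* && mkdir -p /root/.ceph && cp -a /etc/ceph/. /root/.ceph/"
...
```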

Installation

Create the RBD provisioner.

1. Create the provisioner. Save the following manifest as rbd-provisioner.yaml:

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns", "coredns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
spec:
  selector:
    matchLabels:
      app: rbd-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
      - name: rbd-provisioner
        image: quay.io/xianchao/external_storage/rbd-provisioner:v1
        imagePullPolicy: IfNotPresent
        env:
        - name: PROVISIONER_NAME
          value: ceph.com/rbd
      serviceAccountName: rbd-provisioner
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner
```

Apply it:

```bash
kubectl apply -f rbd-provisioner.yaml
```
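On an unsupported version, the provisioner Deployment can look healthy and still fail with the selfLink problem described in the note above. The sketch below waits for the rollout and then scans the provisioner's logs. It assumes the error text contains "selfLink was empty", the message older client-go libraries return when they cannot build an object reference; treat that exact wording as an assumption. The error typically appears only once a PVC is being provisioned, so it is worth rerunning after creating the PVC in step 6. `check_rbd_provisioner` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if rbd-provisioner rolled out and its logs
# show no selfLink error, 1 if the rollout did not finish, 2 on a selfLink error.
check_rbd_provisioner() {
  kubectl rollout status deployment/rbd-provisioner --timeout=120s || return 1
  # Assumption: the failure is logged with the phrase "selfLink was empty".
  if kubectl logs deployment/rbd-provisioner | grep -qi 'selflink was empty'; then
    echo "rbd-provisioner cannot build object references (selfLink missing)" >&2
    return 2
  fi
  echo "rbd-provisioner is running with no selfLink errors in its logs"
}

# Usage:
#   check_rbd_provisioner
```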

2. In Ceph, create a pool for k8s to use:

```bash
ceph osd pool create k8s-storageclass 128
ceph osd pool application enable k8s-storageclass rbd
```

3. Base64-encode the key of the Ceph admin user:

```bash
mkdir -p ~/ceph/storageclass
cd ~/ceph/storageclass
echo -n "AQCLKfdlDHszCRAAggEyYxthw2rcxmoVsLUOoQ==" | base64
```

Output:

```
QVFDTEtmZGxESHN6Q1JBQWdnRXlZeHRodzJyY3htb1ZzTFVPb1E9PQ==
```

4. Create the k8s ceph-secret:

```bash
cat > ceph-secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: ceph-storageclass-secret
type: "ceph.com/rbd"   # Secret type, set here to the provisioner name
data:
  key: QVFDTEtmZGxESHN6Q1JBQWdnRXlZeHRodzJyY3htb1ZzTFVPb1E9PQ==
EOF
kubectl apply -f ceph-secret.yaml
kubectl get secret
```

Output:

```
NAME                       TYPE           DATA   AGE
ceph-storageclass-secret   ceph.com/rbd   1      4m46s
```
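Pasting the encoded key by hand is error-prone. If a trailing newline slips into the input, as with plain `echo`, the encoded value changes. The sketch below builds the same Secret manifest directly from the raw key. `printf '%s'` avoids the newline, and `tr -d '\n'` keeps the encoded value on one line. `make_ceph_secret` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints a Secret manifest for a raw Ceph key.
# Arguments: secret name, secret type, raw key (as printed by `ceph auth get-key`).
make_ceph_secret() {
  local name=$1 type=$2 key=$3
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
type: "${type}"
data:
  key: $(printf '%s' "$key" | base64 | tr -d '\n')
EOF
}

# Usage on a node with the admin keyring:
#   make_ceph_secret ceph-storageclass-secret ceph.com/rbd \
#     "$(ceph auth get-key client.admin)" > ceph-secret.yaml
```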

5. Create the StorageClass:

```bash
cat > storageclass.yaml << EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: k8s-rbd
provisioner: ceph.com/rbd   # provisioner name
parameters:
  monitors: 192.168.10.141:6789,192.168.10.142:6789,192.168.10.143:6789   # Ceph monitor addresses and ports
  adminId: admin                               # Ceph admin user ID
  adminSecretName: ceph-storageclass-secret    # Kubernetes Secret holding the admin key
  pool: k8s-storageclass                       # Ceph pool
  userId: admin                                # Ceph user ID
  userSecretName: ceph-storageclass-secret     # Kubernetes Secret holding the user key
  fsType: xfs                                  # filesystem created on the volume
  imageFormat: "2"                             # RBD image format
  imageFeatures: "layering"                    # RBD image features
EOF
kubectl apply -f storageclass.yaml
kubectl get storageclass
```

Output:

```
storageclass.storage.k8s.io/k8s-rbd created

NAME      PROVISIONER    RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
k8s-rbd   ceph.com/rbd   Delete          Immediate           false
```

6. Create a PVC from the StorageClass:

```bash
cat > storageclass-pvc.yaml << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: k8s-rbd
EOF
kubectl apply -f storageclass-pvc.yaml
```

The PVC should find the provisioner through the StorageClass. The provisioner then carves space out of its pool and creates a PV for the PVC. If that happens, the provisioner is working correctly.
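One way to make that check concrete is to wait until the PVC reports `Bound` and then print the PV the provisioner created for it. A PVC stuck in `Pending` usually means the provisioner failed, and its logs are the next place to look. This is a sketch. `wait_for_pvc_bound` and its 60-second default timeout are hypothetical choices.

```bash
#!/usr/bin/env bash
# Hypothetical helper: polls PVC every 2s until Bound or TIMEOUT seconds pass.
wait_for_pvc_bound() {
  local pvc=$1 timeout=${2:-60} phase i
  for (( i = 0; i < timeout; i += 2 )); do
    phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [[ $phase == Bound ]]; then
      # spec.volumeName holds the name of the PV bound to this claim.
      echo "$pvc bound to $(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')"
      return 0
    fi
    sleep 2
  done
  echo "$pvc still ${phase:-unknown} after ${timeout}s" >&2
  return 1
}

# Usage:
#   wait_for_pvc_bound rbd-pvc
```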

Mounting CephFS in k8s

1. Find the key of the Ceph admin user:

```bash
cat /etc/ceph/ceph.client.admin.keyring
```

The key in the keyring is `AQCLKfdlDHszCRAAggEyYxthw2rcxmoVsLUOoQ==`. Write it to a secret file:

```bash
# '>' overwrites the file, so rerunning does not append a second key
echo -n 'AQCLKfdlDHszCRAAggEyYxthw2rcxmoVsLUOoQ==' > /etc/ceph/admin.secret
```

2. Use this key to mount CephFS from the Ceph nodes at /data/ceph on this host:

```bash
mkdir -p /data/ceph
mount -t ceph 192.168.10.141:6789:/ /data/ceph -o name=admin,secretfile=/etc/ceph/admin.secret
```

Check the file system:

```bash
df -Th | grep ceph
```

```
192.168.10.141:6789:/ ceph  282G     0  282G   0% /data/ceph
```

3. Create the Secret. First base64-encode the key:

```bash
echo -n 'AQCLKfdlDHszCRAAggEyYxthw2rcxmoVsLUOoQ==' | base64
```

```
QVFDTEtmZGxESHN6Q1JBQWdnRXlZeHRodzJyY3htb1ZzTFVPb1E9PQ==
```

Then create cephfs-secret.yaml:

```bash
cat > cephfs-secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: cephfs-secret
data:
  key: QVFDTEtmZGxESHN6Q1JBQWdnRXlZeHRodzJyY3htb1ZzTFVPb1E9PQ==
EOF
kubectl apply -f cephfs-secret.yaml
```

4. Create a PV and a PVC that point at a directory in the mounted file system:

```bash
cd /data/ceph/
mkdir -p wangsheng && chmod 0777 wangsheng
cat > pv-pvc.yaml << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  volumeName: cephfs-pv
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: cephfs-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  cephfs:
    monitors:
      - 192.168.10.141:6789
      - 192.168.10.142:6789
      - 192.168.10.143:6789
    path: /wangsheng
    user: admin
    readOnly: false
    secretRef:
      name: cephfs-secret
  persistentVolumeReclaimPolicy: Recycle
EOF
kubectl apply -f pv-pvc.yaml
kubectl get pv | grep ceph
```

```
cephfs-pv   10Gi   RWX   Recycle   Bound   default/cephfs-pvc   117s
```

5. Test by creating two pods:

```bash
cat > pod-test.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: cephfs-pod-1
spec:
  containers:
  - image: nginx
    name: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-v1
      mountPath: /mnt
  volumes:
  - name: test-v1
    persistentVolumeClaim:
      claimName: cephfs-pvc
---
apiVersion: v1
kind: Pod
metadata:
  name: cephfs-pod-2
spec:
  containers:
  - image: nginx
    name: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-v1
      mountPath: /mnt
  volumes:
  - name: test-v1
    persistentVolumeClaim:
      claimName: cephfs-pvc
EOF
kubectl apply -f pod-test.yaml
kubectl get pods
```

Create a file in each pod:

```bash
kubectl exec -it cephfs-pod-1 -- /bin/sh
# inside cephfs-pod-1:
cd /mnt
touch 1

kubectl exec -it cephfs-pod-2 -- /bin/sh
# inside cephfs-pod-2:
cd /mnt
touch 2
```

Check on the host. Both files are present, so the two pods share one PVC:

```bash
ls /data/ceph/wangsheng/
```

```
1  2
```
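`cat` on a keyring prints the whole `[client.admin]` section (key plus caps), not just the key. Copying the key out by hand is therefore easy to get wrong. The sketch below extracts the `key = ...` value with awk and writes it to the secret file used by `mount -t ceph ... -o secretfile=...`. The `umask 077` subshell makes a newly created file readable by root only (an existing file keeps its mode). `write_secretfile` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copies the key from a Ceph keyring into a secretfile.
# Arguments: keyring path, output path.
write_secretfile() {
  local keyring=$1 out=$2 key
  # Keyring lines look like: "<tab>key = AQ...==" ; take the first such value.
  key=$(awk '$1 == "key" && $2 == "=" { print $3; exit }' "$keyring")
  [[ -n $key ]] || { echo "no key found in $keyring" >&2; return 1; }
  # New file gets mode 600; '>' overwrites instead of appending.
  ( umask 077; printf '%s' "$key" > "$out" )
}

# Usage:
#   write_secretfile /etc/ceph/ceph.client.admin.keyring /etc/ceph/admin.secret
```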