如果出现无法载图的情况,请检查与github的连通性

注:本文较长,建议配合右侧目录食用

关于docker的详细基础可以看另外一篇博文,这里只介绍与k8s相关的部分,以及做一些补充

k8s搭建 k8s1.20搭建 环境

环境初始化 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 hostnamectl set-hostname ws-k8s-master1 && bash echo " 192.168.8.151 ws-k8s-master1 192.168.8.152 ws-k8s-node1 192.168.8.153 ws-k8s-node2 " >> /etc/hostsnmcli con modify ens33 ipv4.addresses 192.168.8.151/24 ipv4.gateway 192.168.8.2 ipv4.dns 192.168.8.2 ipv4.method manual nmcli con up ens33 sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now yum -y update ssh-keygen ssh-copy-id ws-k8s-node1 ssh-copy-id ws-k8s-node2 swapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter echo "modprobe br_netfilter" >> /etc/profile echo " net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 " >> /etc/sysctl.d/k8s.confsysctl -p /etc/sysctl.d/k8s.conf yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm echo " [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 " >> /etc/yum.repos.d/kubernetes.reposcp /etc/yum.repos.d/kubernetes.repo ws-k8s-node1:/etc/yum.repos.d/ scp /etc/yum.repos.d/kubernetes.repo ws-k8s-node2:/etc/yum.repos.d/ sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf systemctl restart chronyd yum -y install docker-ce systemctl enable docker --now echo ' { "registry-mirrors": ["https://bsx9xf1d.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] } ' > /etc/docker/daemon.jsonsystemctl daemon-reload systemctl restart docker reboot hostnamectl set-hostname ws-k8s-node1 && bash echo " 192.168.8.151 ws-k8s-master1 192.168.8.152 ws-k8s-node1 192.168.8.153 ws-k8s-node2 " >> /etc/hostsnmcli con modify ens33 ipv4.addresses 192.168.8.152/24 ipv4.gateway 192.168.8.2 ipv4.dns 192.168.8.2 ipv4.method manual nmcli con up ens33 sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now yum -y update yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm ssh-keygen ssh-copy-id ws-k8s-node2 ssh-copy-id ws-k8s-master1 swapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter echo "modprobe br_netfilter" >> /etc/profileecho " net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 " >> /etc/sysctl.d/k8s.confsysctl -p /etc/sysctl.d/k8s.conf sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf systemctl restart chronyd wget -O get-docker.sh https://get.docker.com sh get-docker.sh systemctl enable docker --now echo ' { "registry-mirrors": ["https://bsx9xf1d.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] } ' > /etc/docker/daemon.jsonsystemctl daemon-reload systemctl restart docker reboot hostnamectl set-hostname ws-k8s-node2 && bash echo " 192.168.8.151 ws-k8s-master1 192.168.8.152 ws-k8s-node1 192.168.8.153 ws-k8s-node2 " >> /etc/hostsnmcli con modify ens33 ipv4.addresses 192.168.8.153/24 ipv4.gateway 192.168.8.2 ipv4.dns 192.168.8.2 ipv4.method manual nmcli con up ens33 sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm yum -y update ssh-keygen ssh-copy-id ws-k8s-node1 ssh-copy-id ws-k8s-master1 swapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter echo "modprobe br_netfilter" >> /etc/profileecho " net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 " >> /etc/sysctl.d/k8s.confsysctl -p /etc/sysctl.d/k8s.conf sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf systemctl restart chronyd wget -O get-docker.sh https://get.docker.com sh get-docker.sh systemctl enable docker --now echo ' { "registry-mirrors": ["https://bsx9xf1d.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] } ' > /etc/docker/daemon.jsonsystemctl daemon-reload systemctl restart docker reboot

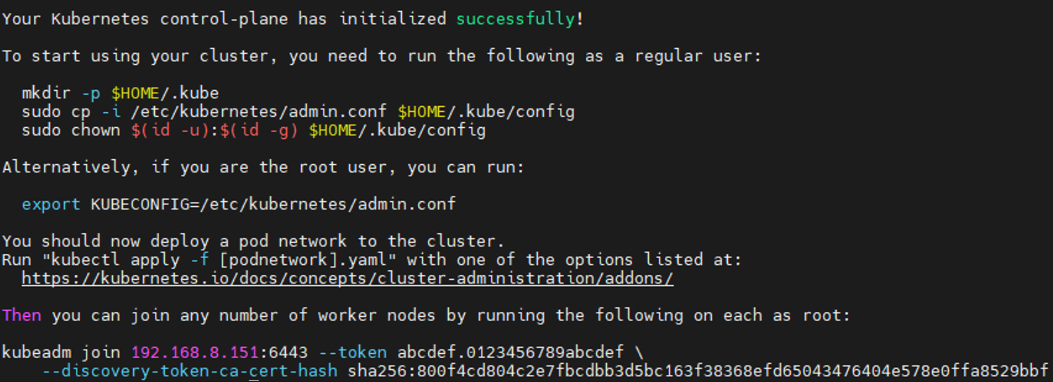

主节点安装k8s 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6 systemctl enable kubelet kubeadm config print init-defaults > kubeadm.yaml vim kubeadm.yaml advertiseAddress: 192.168.8.151 name: ws-k8s-master1 imageRepository: registry.aliyuncs.com/google_containers kubernetesVersion: v1.20.6 在networking中增加并对齐 podSubnet: 10.10.0.0/16 注释criSocket这一行 echo " --- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: ipvs --- apiVersion: kubelet.config.k8s.io/v1beta1 kind: KubeletConfiguration cgroupDriver: systemd " >> kubeadm.yamlkubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification mkdir -p $HOME /.kubesudo cp -i /etc/kubernetes/admin.conf $HOME /.kube/config sudo chown $(id -u):$(id -g) $HOME /.kube/config kubectl get nodes

工作节点添加 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 kubeadm token create --print-join-command yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6 systemctl enable kubelet kubeadm join 192.168.8.151:6443 --token j7k3oa.761wztev8dgrqv59 \ --discovery-token-ca-cert-hash \ sha256:800f4cd804c2e7fbcdbb3d5bc163f38368efd65043476404e578e0ffa8529bbf \ --ignore-preflight-errors=SystemVerification yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6 systemctl enable kubelet kubeadm join 192.168.8.151:6443 --token j7k3oa.761wztev8dgrqv59 \ --discovery-token-ca-cert-hash \ sha256:800f4cd804c2e7fbcdbb3d5bc163f38368efd65043476404e578e0ffa8529bbf \ --ignore-preflight-errors=SystemVerification kubectl get nodes NAME STATUS ROLES AGE VERSION ws-k8s-master1 NotReady control-plane,master 21m v1.20.6 ws-k8s-node1 NotReady <none> 98s v1.20.6 ws-k8s-node2 NotReady <none> 60s v1.20.6 kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE coredns-7f89b7bc75-6ntkb 0/1 Pending 0 12m coredns-7f89b7bc75-cph9t 0/1 Pending 0 12m etcd-ws-k8s-master1 1/1 Running 0 12m kube-apiserver-ws-k8s-master1 1/1 Running 1 12m kube-controller-manager-ws-k8s-master1 1/1 Running 0 12m kube-proxy-2sltc 1/1 Running 0 12m kube-scheduler-ws-k8s-master1 1/1 Running 0 12m

calico网络插件 Calico通过calico.yaml配置文件https://docs.projectcalico.org/manifests/calico.yaml

一些参数 CALICO_IPV4POOL_IPIP:是否启用IPIP模式,默认采用IPIP

IP_AUTODETECTION_METHOD:获取Node IP地址的方式,默认使用第1个网络接口的IP地址

name: IP_AUTODETECTION_METHOD

Calico组件 1、felix

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 kubectl get nodes NAME STATUS ROLES AGE VERSION ws-k8s-master1 NotReady control-plane,master 21m v1.20.6 ws-k8s-node1 NotReady <none> 98s v1.20.6 ws-k8s-node2 NotReady <none> 60s v1.20.6 kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE coredns-7f89b7bc75-6ntkb 0/1 Pending 0 12m coredns-7f89b7bc75-cph9t 0/1 Pending 0 12m etcd-ws-k8s-master1 1/1 Running 0 12m kube-apiserver-ws-k8s-master1 1/1 Running 1 12m kube-controller-manager-ws-k8s-master1 1/1 Running 0 12m kube-proxy-2sltc 1/1 Running 0 12m kube-scheduler-ws-k8s-master1 1/1 Running 0 12m kubectl apply -f calico.yaml kubectl get nodes NAME STATUS ROLES AGE VERSION ws-k8s-master1 Ready control-plane,master 34m v1.20.6 ws-k8s-node1 Ready <none> 14m v1.20.6 ws-k8s-node2 Ready <none> 13m v1.20 kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE calico-kube-controllers-6949477b58-z9gk4 1/1 Running 0 4m15s calico-node-d6mvs 1/1 Running 0 4m15s calico-node-sx4t8 1/1 Running 0 4m15s calico-node-vdxvc 1/1 Running 0 4m15s coredns-7f89b7bc75-6ntkb 1/1 Running 0 35m coredns-7f89b7bc75-cph9t 1/1 Running 0 35m etcd-ws-k8s-master1 1/1 Running 0 36m kube-apiserver-ws-k8s-master1 1/1 Running 1 36m kube-controller-manager-ws-k8s-master1 1/1 Running 0 36m kube-proxy-2sltc 1/1 Running 0 35m kube-proxy-ndfn7 1/1 Running 0 15m kube-proxy-rmdfb 1/1 Running 0 16m kube-scheduler-ws-k8s-master1 1/1 Running 0 36m

k8s1.26搭建 环境

环境初始化 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 hostnamectl set-hostname ws-k8s-master1 && bash nmcli con modify ens33 ipv4.addresses 192.168.8.160/24 ipv4.gateway 192.168.8.2 ipv4.dns 192.168.8.2 ipv4.method manual nmcli con up ens33 yum install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack telnet ipvsadm yum -y update sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now echo " 192.168.8.160 ws-k8s-master1 192.168.8.161 ws-k8s-node1 " >> /etc/hostsswapoff -a sed -i '$ s/^/#/' /etc/fstab ssh-keygen ssh-copy-id ws-k8s-node1 modprobe br_netfilter cat > /etc/sysctl.d/k8s.conf << EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl -p /etc/sysctl.d/k8s.conf echo " [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 " >> /etc/yum.repos.d/kubernetes.repoyum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf reboot hostnamectl set-hostname ws-k8s-node1 && bash nmcli con modify ens33 ipv4.addresses 192.168.8.161/24 ipv4.gateway 192.168.8.2 ipv4.dns 192.168.8.2 ipv4.method manual nmcli con up ens33 yum install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack telnet ipvsadm yum -y update sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now echo " 192.168.8.160 ws-k8s-master1 192.168.8.161 ws-k8s-node1 " >> /etc/hostsswapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter cat > /etc/sysctl.d/k8s.conf << EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl -p /etc/sysctl.d/k8s.conf echo " [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 " >> /etc/yum.repos.d/kubernetes.repoyum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf reboot

安装containerd 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 yum install -y containerd.io-1.6.6 mkdir -p /etc/containerdmkdir /etc/containerd/certs.d/docker.io/ -pecho '[host."https://bsx9xf1d.mirror.aliyuncs.com",host."https://registry.docker-cn.com"] capabilities = ["pull"] ' >> /etc/containerd/certs.d/docker.io/hosts.tomlcontainerd config default > /etc/containerd/config.toml 修改 vim /etc/containerd/config.toml SystemdCgroup = True sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7" config_path = "/etc/containerd/certs.d" systemctl enable containerd.service --now cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///run/containerd/containerd.sock image-endpoint: unix:///run/containerd/containerd.sock timeout: 10 debug: false EOF systemctl restart containerd yum install -y docker-ce && systemctl enable docker --now echo ' {"registry-mirrors": ["https://bsx9xf1d.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] }' > /etc/docker/daemon.jsonsystemctl daemon-reload systemctl restart docker yum install -y containerd.io-1.6.6 mkdir -p /etc/containerdcontainerd config default > /etc/containerd/config.toml vim /etc/containerd/config.toml 修改 SystemdCgroup = True sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7" config_path = "/etc/containerd/certs.d" mkdir /etc/containerd/certs.d/docker.io/ -pecho '[host."https://bsx9xf1d.mirror.aliyuncs.com",host."https://registry.docker-cn.com"] capabilities = ["pull"] ' >> /etc/containerd/certs.d/docker.io/hosts.tomlsystemctl enable containerd.service --now cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///run/containerd/containerd.sock image-endpoint: unix:///run/containerd/containerd.sock timeout: 10 debug: false EOF systemctl restart containerd yum install -y docker-ce && systemctl enable docker --now echo ' {"registry-mirrors": ["https://bsx9xf1d.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] }' > /etc/docker/daemon.jsonsystemctl daemon-reload systemctl restart docker

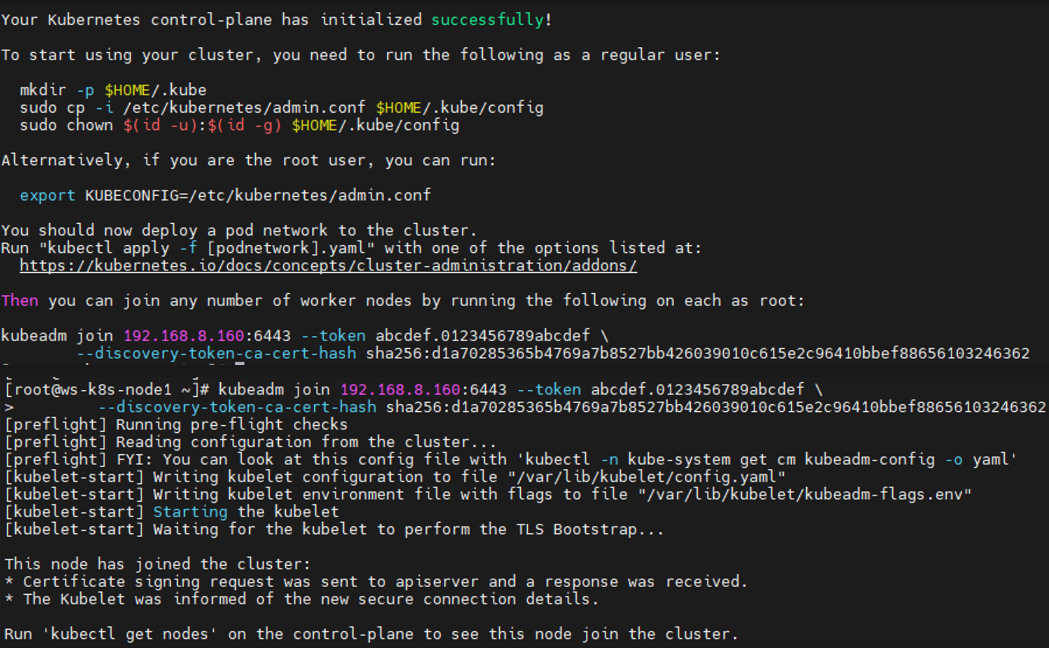

安装k8s 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 yum install -y kubelet-1.26.0 yum install -y kubeadm-1.26.0 yum install -y kubectl-1.26.0 systemctl enable kubelet crictl config runtime-endpoint unix:///run/containerd/containerd.sock kubeadm config print init-defaults > kubeadm.yaml vim kubeadm.yaml 修改: advertiseAddress: 192.168.8.160 criSocket: unix:///run/containerd/containerd.sock name: ws-k8s-master1 imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers kubernetesVersion: 1.26.0 在networking下添加: podSubnet: 10.10.0.0/16 echo '--- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: ipvs #kube代理模式为ipvs --- apiVersion: kubelet.config.k8s.io/v1beta1 kind: KubeletConfiguration #cgroup驱动使用systemd cgroupDriver: systemd' >> kubeadm.yamlkubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification mkdir -p $HOME /.kube sudo cp -i /etc/kubernetes/admin.conf $HOME /.kube/config sudo chown $(id -u):$(id -g) $HOME /.kube/config kubectl get nodes kubeadm token create --print-join-command kubeadm join 192.168.8.160:6443 --token oa7h1w.79oq2ol0w3jqcgud \ --discovery-token-ca-cert-hash sha256:d1a70285365b4769a7b8527bb426039010c615e2c96410bbef88656103246362 \ --ignore-preflight-errors=SystemVerification

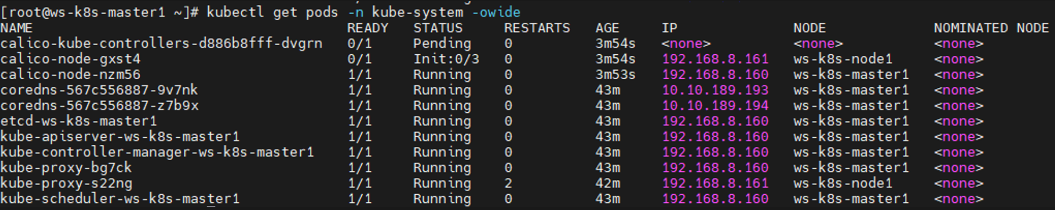

安装Calico 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 kubectl get node NAME STATUS ROLES AGE VERSION ws-k8s-master1 NotReady control-plane 30m v1.26.0 ws-k8s-node1 NotReady <none> 28m v1.26.0 kubectl get pods -n kube-system -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES coredns-567c556887-9v7nk 0/1 Pending 0 29m <none> <none> <none> <none> coredns-567c556887-z7b9x 0/1 Pending 0 29m <none> <none> <none> <none> etcd-ws-k8s-master1 1/1 Running 0 29m 192.168.8.160 ws-k8s-master1 <none> <none> kube-apiserver-ws-k8s-master1 1/1 Running 0 29m 192.168.8.160 ws-k8s-master1 <none> <none> kube-controller-manager-ws-k8s-master1 1/1 Running 0 29m 192.168.8.160 ws-k8s-master1 <none> <none> kube-proxy-bg7ck 1/1 Running 0 29m 192.168.8.160 ws-k8s-master1 <none> <none> kube-proxy-s22ng 1/1 Running 1 28m 192.168.8.161 ws-k8s-node1 <none> <none> kube-scheduler-ws-k8s-master1 1/1 Running 0 29m 192.168.8.160 ws-k8s-master1 <none> <none> kubectl apply -f calico.yaml kubectl get pods -n kube-system -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES calico-kube-controllers-d886b8fff-795k8 0/1 Pending 0 4m33s <none> <none> <none> <none> calico-node-hcfmw 0/1 Init:0/3 0 4m32s 192.168.8.161 ws-k8s-node1 <none> <none> calico-node-vds28 0/1 Init:0/3 0 4m33s 192.168.8.160 ws-k8s-master1 <none> <none> kubectl delete -f calico.yaml ctr -n=k8s.io images import calico.tar.gz kubectl apply -f calico.yaml kubectl get pods -n kube-system -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES calico-kube-controllers-d886b8fff-nc6mm 1/1 Running 0 21s 10.10.179.1 ws-k8s-node1 <none> <none> calico-node-4rj9m 1/1 Running 0 21s 192.168.8.161 ws-k8s-node1 <none> <none> calico-node-gn6gm 1/1 Running 0 21s 192.168.8.160 ws-k8s-master1 <none> <none> coredns-567c556887-9v7nk 1/1 Running 0 77m 10.10.189.193 ws-k8s-master1 <none> <none> coredns-567c556887-z7b9x 1/1 Running 0 77m 10.10.189.194 ws-k8s-master1 <none> <none> etcd-ws-k8s-master1 1/1 Running 0 77m 192.168.8.160 ws-k8s-master1 <none> <none> kube-apiserver-ws-k8s-master1 1/1 Running 0 77m 192.168.8.160 ws-k8s-master1 <none> <none> kube-controller-manager-ws-k8s-master1 1/1 Running 0 77m 192.168.8.160 ws-k8s-master1 <none> <none> kube-proxy-bg7ck 1/1 Running 0 77m 192.168.8.160 ws-k8s-master1 <none> <none> kube-proxy-s22ng 1/1 Running 0 75m 192.168.8.161 ws-k8s-node1 <none> <none> kube-scheduler-ws-k8s-master1 1/1 Running 0 77m 192.168.8.160 ws-k8s-master1 <none> <none> [root@ws-k8s-master1 ~] NAME STATUS ROLES AGE VERSION ws-k8s-master1 Ready control-plane 77m v1.26.0 ws-k8s-node1 Ready <none> 76m v1.26.0 ctr -n k8s.io images pull docker.io/library/busybox:1.28 kubectl run busybox --image docker.io/library/busybox:1.28 \ --image-pull-policy=IfNotPresent --restart=Never --rm -it busybox -- sh ping www.baidu.com 64 bytes from 180.101.50.188: seq =0 ttl=127 time=22.460 ms 64 bytes from 180.101.50.188: seq =1 ttl=127 time=16.696 ms nslookup kubernetes.default.svc.cluster.local Server: 10.96.0.10 Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local Name: kubernetes.default.svc.cluster.local Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

ctr和crictl的区别 ctr是containerd自带的CLI命令行工具

扩容k8s集群 添加工作节点 192.168.8.162 ws-k8s-node2

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 echo "192.168.8.161 ws-k8s-node2 " >> /etc/hostsssh-copy-id ws-k8s-node2 kubeadm token create --print-join-command echo "192.168.8.161 ws-k8s-node2 " >> /etc/hostshostnamectl set-hostname ws-k8s-node2 && bash nmcli con modify ens33 ipv4.addresses 192.168.8.162/24 ipv4.gateway 192.168.8.2 ipv4.dns 192.168.8.2 ipv4.method manual nmcli con up ens33 yum install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack telnet ipvsadm yum -y update sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now echo " 192.168.8.160 ws-k8s-master1 192.168.8.161 ws-k8s-node1 192.168.8.161 ws-k8s-node2 " >> /etc/hostsswapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter cat > /etc/sysctl.d/k8s.conf << EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl -p /etc/sysctl.d/k8s.conf echo " [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 " >> /etc/yum.repos.d/kubernetes.repoyum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf yum install -y containerd.io-1.6.6 mkdir -p /etc/containerdcontainerd config default > /etc/containerd/config.toml vim /etc/containerd/config.toml 修改 SystemdCgroup = True sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7" config_path = "/etc/containerd/certs.d" mkdir /etc/containerd/certs.d/docker.io/ -pecho '[host."https://bsx9xf1d.mirror.aliyuncs.com",host."https://registry.docker-cn.com"] capabilities = ["pull"] ' >> /etc/containerd/certs.d/docker.io/hosts.tomlsystemctl enable containerd.service --now cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///run/containerd/containerd.sock image-endpoint: unix:///run/containerd/containerd.sock timeout: 10 debug: false EOF systemctl restart containerd yum install -y docker-ce && systemctl enable docker --now echo ' {"registry-mirrors": ["https://bsx9xf1d.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"] }' > /etc/docker/daemon.jsonsystemctl daemon-reload systemctl restart docker yum install -y kubelet-1.26.0 kubeadm-1.26.0 kubectl-1.26.0 systemctl enable kubelet reboot kubeadm join 192.168.8.160:6443 --token h5lkkm.dsybifhcfj9okvbj \ --discovery-token-ca-cert-hash sha256:d1a70285365b4769a7b8527bb426039010c615e2c96410bbef88656103246362 \

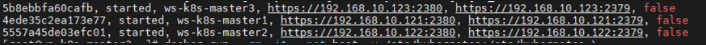

k8s1.28高可用搭建 master1 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 hostnamectl set-hostname ws-k8s-master1 && bash nmcli con modify ens18 ipv4.addresses 192.168.10.121/24 ipv4.gateway 192.168.10.1 ipv4.dns 192.168.1.1 ipv4.method manual nmcli con up ens18 yum install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack telnet ipvsadm yum -y update sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now echo " 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 192.168.10.100 1panel 192.168.10.120 pve 192.168.10.121 ws-k8s-master1 192.168.10.122 ws-k8s-master2 192.168.10.123 ws-k8s-master3 192.168.10.130 harbor 192.168.10.131 ws-k8s-node1 192.168.10.132 ws-k8s-node2 192.168.10.133 ws-k8s-node3 192.168.10.140 docker-host 192.168.10.141 ceph-node1 192.168.10.142 ceph-node2 192.168.10.143 ceph-node3 " > /etc/hostsswapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter cat > /etc/sysctl.d/k8s.conf << EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl -p /etc/sysctl.d/k8s.conf yum -y install yum-utils yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo sysctl -p /etc/sysctl.d/k8s.conf echo " [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 " >> /etc/yum.repos.d/kubernetes.repoyum -y install chrony sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf yum install -y containerd.io-1.6.6 mkdir -p /etc/containerdmkdir /etc/containerd/certs.d/docker.io/ -pecho '[host."https://hub-mirror.c.163.com",host."https://docker.m.daocloud.io", host."https://ghcr.io",host."https://mirror.baidubce.com",host."https://docker.nju.edu.cn"] capabilities = ["pull"] ' > /etc/containerd/certs.d/docker.io/hosts.tomlcontainerd config default > /etc/containerd/config.toml 修改 vim /etc/containerd/config.toml SystemdCgroup = true sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7" config_path = "/etc/containerd/certs.d" cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///run/containerd/containerd.sock image-endpoint: unix:///run/containerd/containerd.sock timeout: 10 debug: false EOF systemctl enable containerd.service --now systemctl restart containerd yum install -y docker-ce && systemctl enable docker --now cat > /etc/docker/daemon.json << EOF { "registry-mirrors": [ "https://hub-mirror.c.163.com", "https://docker.m.daocloud.io", "https://ghcr.io", "https://mirror.baidubce.com", "https://docker.nju.edu.cn" ] } EOF systemctl daemon-reload systemctl restart docker yum install -y kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1 systemctl enable kubelet kubeadm config print init-defaults > kubeadm.yaml vim kubeadm.yaml 修改: advertiseAddress: 192.168.10.121 criSocket: unix:///run/containerd/containerd.sock name: ws-k8s-master1 imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers kubernetesVersion: 1.28.0 在networking下添加: podSubnet: 10.244.0.0/16 echo '--- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration mode: ipvs #kube代理模式为ipvs --- apiVersion: kubelet.config.k8s.io/v1beta1 kind: KubeletConfiguration #cgroup驱动使用systemd cgroupDriver: systemd' >> kubeadm.yamlreboot kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification mkdir -p $HOME /.kube sudo cp -i /etc/kubernetes/admin.conf $HOME /.kube/config sudo chown $(id -u):$(id -g) $HOME /.kube/config kubectl get nodes kubeadm token create --print-join-command kubeadm join 192.168.10.121:6443 --token fz8d9z.o5csc8a17ilub13g --discovery-token-ca-cert-hash sha256:c6fe90eb5632c6e422b694d1392722bed65fd768497a98cc75dcab8589ad35a7

master节点加入 #证书

#把master1的证书放到master2上

#master1:检查kubeadm-config ConfigMap

#master2加入集群,–control-plane表示添加控制节点

kubeadm join 192.168.10.121:6443 –token 3cux79.jadpr1rx79h85er5 –discovery-token-ca-cert-hash sha256:bc2f349ee80ea509d925320d3fa7121b32f978071f9d0d3e612b4a3aff311664

#master3

node与节点加入 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 hostnamectl set-hostname ws-k8s-node1 && bash nmcli con modify ens18 ipv4.addresses 192.168.10.131/24 ipv4.gateway 192.168.10.1 ipv4.dns 192.168.1.1 ipv4.method manual nmcli con up ens18 yum install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack telnet ipvsadm yum -y update sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config setenforce 0 systemctl disable firewalld.service --now echo " 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 192.168.10.100 1panel 192.168.10.120 pve 192.168.10.121 ws-k8s-master1 192.168.10.122 ws-k8s-master2 192.168.10.123 ws-k8s-master3 192.168.10.130 harbor 192.168.10.131 ws-k8s-node1 192.168.10.132 ws-k8s-node2 192.168.10.133 ws-k8s-node3 192.168.10.140 docker-host" > /etc/hostsswapoff -a sed -i '$ s/^/#/' /etc/fstab modprobe br_netfilter cat > /etc/sysctl.d/k8s.conf << EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF yum -y install yum-utils yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo sysctl -p /etc/sysctl.d/k8s.conf echo " [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=0 " >> /etc/yum.repos.d/kubernetes.repoyum -y install chrony sed -i 's/^server/#server/g' /etc/chrony.conf sed -i '1s/^/server cn.pool.ntp.org iburst\n/' /etc/chrony.conf yum install -y containerd.io-1.6.6 mkdir -p /etc/containerdcontainerd config default > /etc/containerd/config.toml vim /etc/containerd/config.toml 修改 SystemdCgroup = true sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7" config_path = "/etc/containerd/certs.d" mkdir /etc/containerd/certs.d/docker.io/ -pecho '[host."https://hub-mirror.c.163.com",host."https://docker.m.daocloud.io", host."https://ghcr.io",host."https://mirror.baidubce.com",host."https://docker.nju.edu.cn"] capabilities = ["pull"] ' > /etc/containerd/certs.d/docker.io/hosts.tomlsystemctl enable containerd.service --now cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///run/containerd/containerd.sock image-endpoint: unix:///run/containerd/containerd.sock timeout: 10 debug: false EOF systemctl restart containerd yum install -y docker-ce && systemctl enable docker --now cat > /etc/docker/daemon.json << EOF { "registry-mirrors": [ "https://hub-mirror.c.163.com", "https://docker.m.daocloud.io", "https://ghcr.io", "https://mirror.baidubce.com", "https://docker.nju.edu.cn"] } EOF systemctl daemon-reload systemctl restart docker yum install -y kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1 systemctl enable kubelet reboot kubeadm join 192.168.10.121:6443 --token 2x0xe5.erf0z44xtaciwbh7 --discovery-token-ca-cert-hash sha256:c6fe90eb5632c6e422b694d1392722bed65fd768497a98cc75dcab8589ad35a7

calico calico的yaml在github有https://docs.tigera.io/calico/latest/getting-started/kubernetes/requirements

1 2 ctr -n k8s.io images import calico.tar.gz kubectl apply -f calico.yaml

高可用部分 keepalive+nginx做apiserver高可用 配置文件在后面

master1与master2安装nginx和keepalive,且调整配置文件

systemctl daemon-reload

测试

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 ip a | grep 192.168 inet 192.168.10.121/24 brd 192.168.10.255 scope global noprefixroute ens18 inet 192.168.10.200/24 scope global secondary ens18 systemctl stop keepalived.service ip a | grep 192.168 inet 192.168.10.122/24 brd 192.168.10.255 scope global noprefixroute ens18 inet 192.168.10.200/24 scope global secondary ens18 systemctl start keepalived.service ip a | grep 192.168 inet 192.168.10.121/24 brd 192.168.10.255 scope global noprefixroute ens18 inet 192.168.10.200/24 scope global secondary ens18

配置文件 nginx:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 user nginx; worker_processes auto; error_log /var/log/nginx/error.log; pid /run/nginx.pid; include /usr/share/nginx/modules/*.conf; events { worker_connections 1024; } stream { log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent' ; access_log /var/log/nginx/k8s-access.log main; upstream k8s-apiserver { server 192.168.10.121:6443 weight=5 max_fails=3 fail_timeout=30s; server 192.168.10.122:6443 weight=5 max_fails=3 fail_timeout=30s; server 192.168.10.123:6443 weight=5 max_fails=3 fail_timeout=30s; } server { listen 16443; proxy_pass k8s-apiserver; } } http { log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"' ; access_log /var/log/nginx/access.log main; sendfile on; tcp_nopush on; tcp_nodelay on; keepalive_timeout 65; types_hash_max_size 2048; include /etc/nginx/mime.types; default_type application/octet-stream; server { listen 80 default_server; server_name _; location / { } } }

主keepalive

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 global_defs { notification_email { acassen@firewall.loc failover@firewall.loc sysadmin@firewall.loc } notification_email_from Alexandre.Cassen@firewall.loc smtp_server 127.0.0.1 smtp_connect_timeout 30 router_id NGINX_MASTER } vrrp_script check_nginx { script "/etc/keepalived/check_nginx.sh" } vrrp_instance VI_1 { state MASTER interface ens18 virtual_router_id 51 priority 100 advert_int 1 authentication { auth_type PASS auth_pass 1111 } virtual_ipaddress { 192.168.10.200/24 } track_script { check_nginx } }

备keepalive

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 global_defs { notification_email { acassen@firewall.loc failover@firewall.loc sysadmin@firewall.loc } notification_email_from Alexandre.Cassen@firewall.loc smtp_server 127.0.0.1 smtp_connect_timeout 30 router_id NGINX_MASTER } vrrp_script check_nginx { script "/etc/keepalived/check_nginx.sh" } vrrp_instance VI_1 { state BACKUP interface ens18 virtual_router_id 51 priority 90 advert_int 1 authentication { auth_type PASS auth_pass 1111 } virtual_ipaddress { 192.168.10.200/24 } track_script { check_nginx } }

check_nginx.sh

1 2 3 4 5 6 7 8 9 10 11 12 13 14 #!/bin/bash counter=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$" ) if [ $counter -eq 0 ]; then service nginx start sleep 2 counter=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$" ) if [ $counter -eq 0 ]; then service keepalived stop fi fi

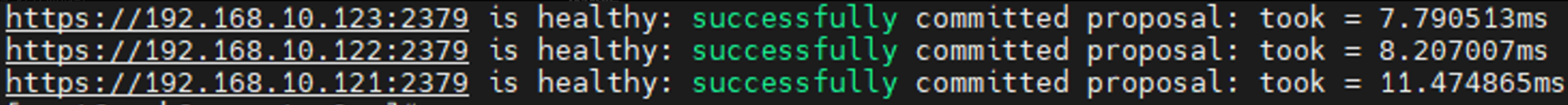

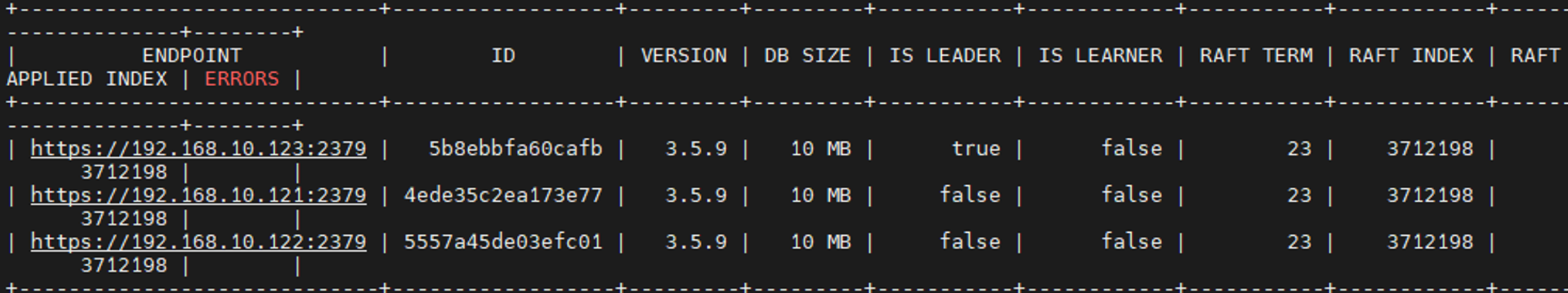

etcd数据库高可用 1 2 3 4 5 6 修改所有master节点的etcd.yaml vim /etc/kubernetes/manifests/etcd.yaml --initial-cluster=ws-k8s-master1=https://192.168.10.121:2380,ws-k8s-master2=https://192.168.10.122:2380,ws-k8s-master3=https://192.168.10.123:2380 systemctl restart kubelet

测试

1 2 3 4 docker run --rm -it --net host -v /etc/kubernetes:/etc/kubernetes \ registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0 etcdctl \ --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key \ --cacert /etc/kubernetes/pki/etcd/ca.crt member list

1 2 3 4 5 docker run --rm -it --net host -v /etc/kubernetes:/etc/kubernetes \ registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0 etcdctl \ --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key \ --cacert /etc/kubernetes/pki/etcd/ca.crt \ --endpoints=https://192.168.10.121:2379,https://192.168.10.122:2379,https://192.168.10.123:2379 endpoint health --cluster

1 2 3 4 5 docker run --rm -it --net host -v /etc/kubernetes:/etc/kubernetes \ registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.4-0 etcdctl \ -w table --cert /etc/kubernetes/pki/etcd/peer.crt \ --key /etc/kubernetes/pki/etcd/peer.key --cacert /etc/kubernetes/pki/etcd/ca.crt \ --endpoints=https://192.168.10.121:2379,https://192.168.10.122:2379,https://192.168.10.123:2379 endpoint status --cluster

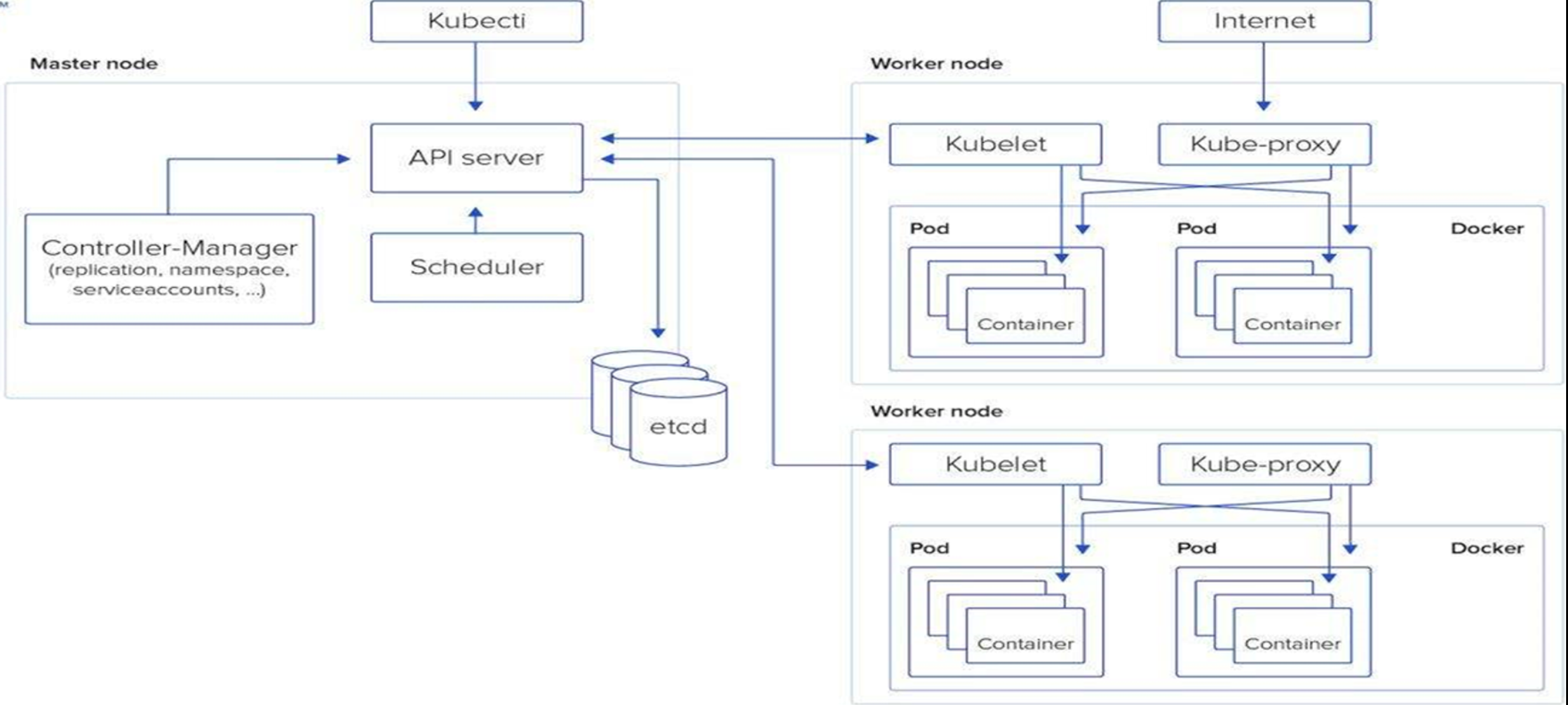

k8s概述

pod是k8s的最小单位

HPA自动扩容与缩容

VPA自动调节pod的资源请求

高可用架构:使用keepalive+lvs对API server做高可用

K8s集群至少需要一个主节点(Master)和多个工作节点(Worker)

常用组件:

k8s的资源对象

1.pod

2.Replicaset

3.Deployment

4.Service

6.Statefulset

7.Job & CronJob

pod资源 pod概述 https://kubernetes.io/zh/

https://kubernetes.io/zh-cn/docs/concepts/workloads/pods/

Pod是Kubernetes中的最小调度单元,k8s都是以pod的方式运行服务的

pod需要调度到工作节点运行,节点的选择由scheduler调度器实现

pod定义时,会定义init容器、应用容器与业务容器

init用以对主容器做初始化操作,查看服务是否正常

pod网络

kubectl get pods -n kube-system -owide #查看kube-system命名空间中的pod

部分控制节点组件是和管理节点共享ip地址,除此之外的pod都是唯一地址,通过calico网络插件分配

启动Pod时,会先启动⼀个pause 的容器,然后将后续的所有容器都 “link 到这个pause 的容器,以实现⽹络共享。

同一个pod内的容器会在共享环境中运行,共享同一个IP和端口

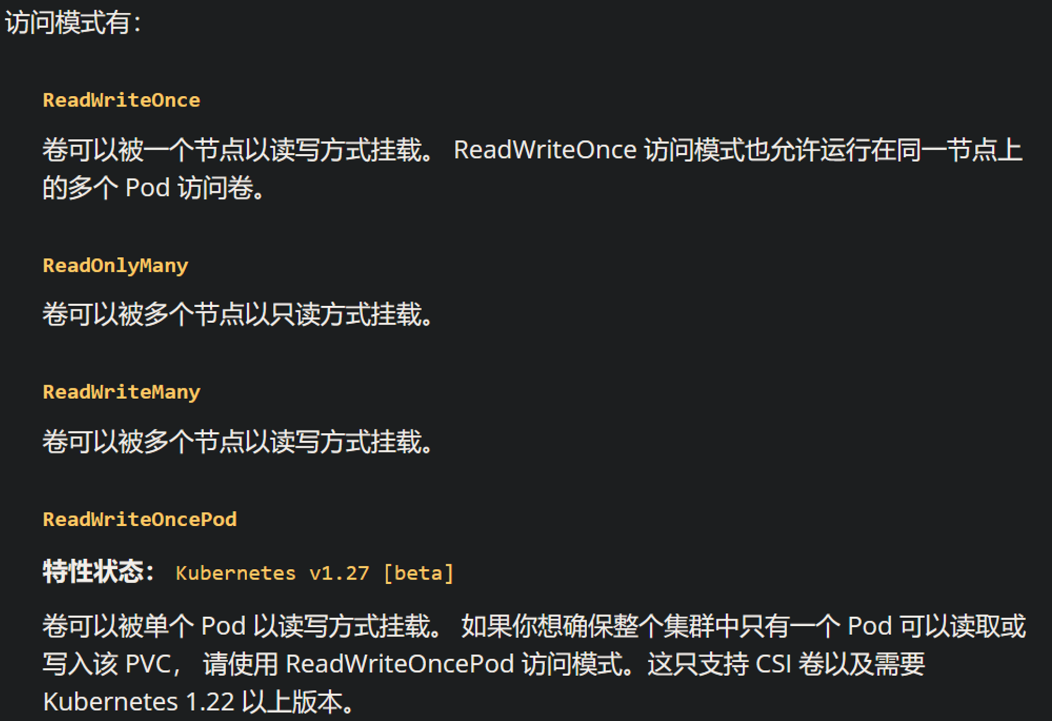

pod存储

通过挂在存储卷,可以让所有容器访问共享卷,允许共享数据

pod和容器对比

pod是容器组成的集合,可以作为一个或多个容器的载体

创建pod的方式

1.使用yaml文件来创建

2.使用kubectl run创建pod

pod运行方式

1、自主式pod:直接定义一个pod资源

kubectl apply -f pod-tomcat.yaml

kubectl get pods -o wide

kubectl delete pods tomcat-test

2、控制器管理的Pod

常见的管理Pod的控制器:Replicaset、Deployment、Job、CronJob、Daemonset、Statefulset。

以下是一个举例的yaml文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 apiVersion: apps/v1 kind: Deployment metadata: name: nginx-test labels: app: nginx-deploy spec: selector: matchLabels: app: nginx replicas: 2 template: metadata: labels: app: nginx spec: containers: - name: my-nginx image: xianchao/nginx:v1 imagePullPolicy: IfNotPresent ports: - containerPort: 80

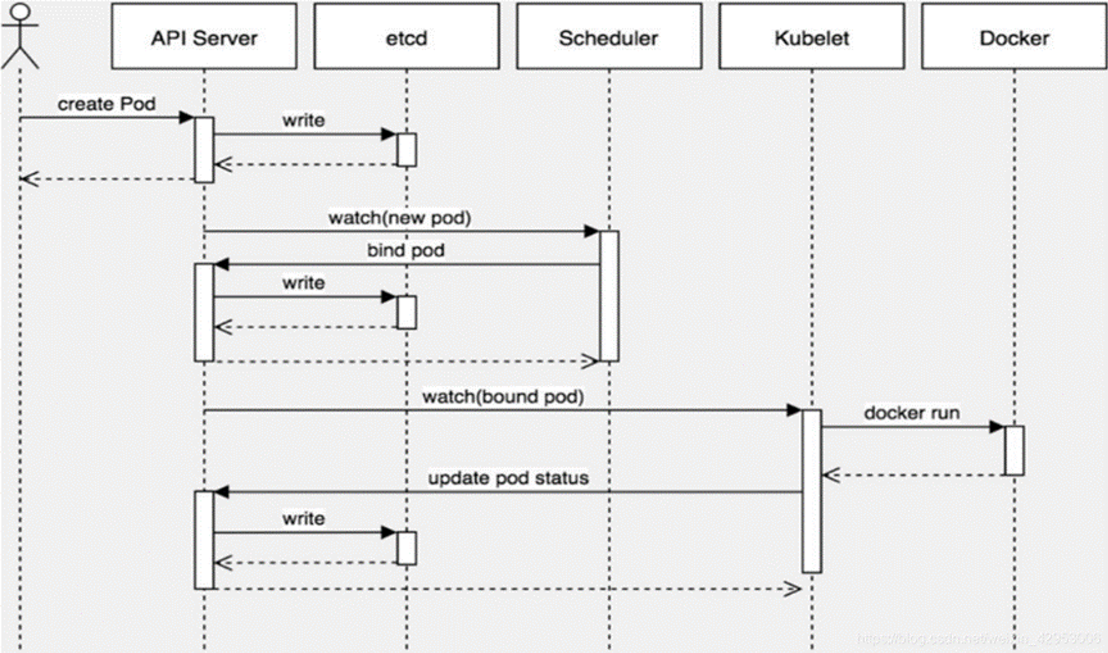

pod创建的步骤

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 kubectl会寻找环境变量kubeconfig 如果没有这个环境变量,会找/root/.kube/config文件 可以通过kubectl config view查看/root/.kube/config内容 kubectl config view apiVersion: v1 clusters: - cluster: certificate-authority-data: DATA+OMITTED server: https://192.168.8.160:6443 name: kubernetes contexts: - context: cluster: kubernetes user: kubernetes-admin name: kubernetes-admin@kubernetes current-context: kubernetes-admin@kubernetes kind: Config preferences: {} users :- name: kubernetes-admin user: client-certificate-data: DATA+OMITTED client-key-data: DATA+OMITTED

1 2 3 4 5 6 7 8 9 10 pod创建过程 1.**kubectl apply -f nginx-deploy.yaml** 找config文件,基于config文件访问指定的集群,找到api-server,把信息给api-server 2.api-server把kubectl的参数或者yaml的参数,写入etcd api-server把pod信息给scheduler调度器,调度器进行调度,并且把调度节点的信息写到etcd 3.api-server调用kubelet,kubelet调用容器运行时docker/container 4.容器运行时把pod信息返回给api-server,写入etcd

pod的创建 通过kubectl run来创建pod 1 2 3 4 5 6 7 kubectl run --help Usage: kubectl run NAME --image=image [--env="key=value" ] [--port=port ] [--dry-run=server|client ] [--overrides=inline-json ] [--command ] -- [COMMAND ] [args... ] [options ] kubectl run tomcat --image=ws/tomcat --image-pull-policy='IfNotPresent' \ --port=8080

通过yaml文件创建,yaml文件简单写法 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 kubectl explain pod kubectl explain pod.metadata yaml的格式:每个字段都比上级字段多空两格,加短横-可以表示以下同级 vim first-pod apiVersion: v1 kind: Pod metadata: annotations: worker: "ws" labels: app: tomcat name: ws-tomcat namespace: default spec: activeDeadlineSeconds: containers: - name: tomcat image: docker.io/library/tomcat imagePullPolicy: IfNotPresent ports: - containerPort: 8080 hostPort: hostIP: kubectl apply -f pod-first.yaml kubectl get pods NAME READY STATUS RESTARTS AGE nginx-test-5b48846ff4-7n4f6 1 /1 Running 0 122m nginx-test-5b48846ff4-mq5tm 1 /1 Running 0 122m ws-tomcat 1 /1 Running 0 50s

pod简单操作 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 kubectl exec -it ws-tomcat -- /bin/bash kubectl exec -it ws-tomcat -c tomcat -- /bin/bash kubectl get pod kubectl get pod -owide master1 2 node1 2 都可以访问pod curl 10.10 .234 .68 :8080 kubectl get pod -l app=tomcat kubectl logs ws-tomcat kubectl describe pods ws-tomcat kubectl delete pods ws-tomcat kubectl delete -f pod-first.yaml kubectl apply -f pod-first.yaml kubectl get pods --show-labels

命名空间与资源配额 命名空间(Namespace)是Kubernetes中用于隔离和组织资源的一种机制。它可以将集群中的资源划分为逻辑上独立的单元,使不同的团队、项目或应用程序可以在同一个集群中共享底层基础设施,同时保持彼此之间的隔离性。

通过使用命名空间,将不同的资源(如Pod、Service、Deployment等)组织在一起,并为它们提供唯一的名称。这样可以避免资源名称的冲突,并提供更好的资源管理和权限控制。

简单一句话:进行各种的资源隔离

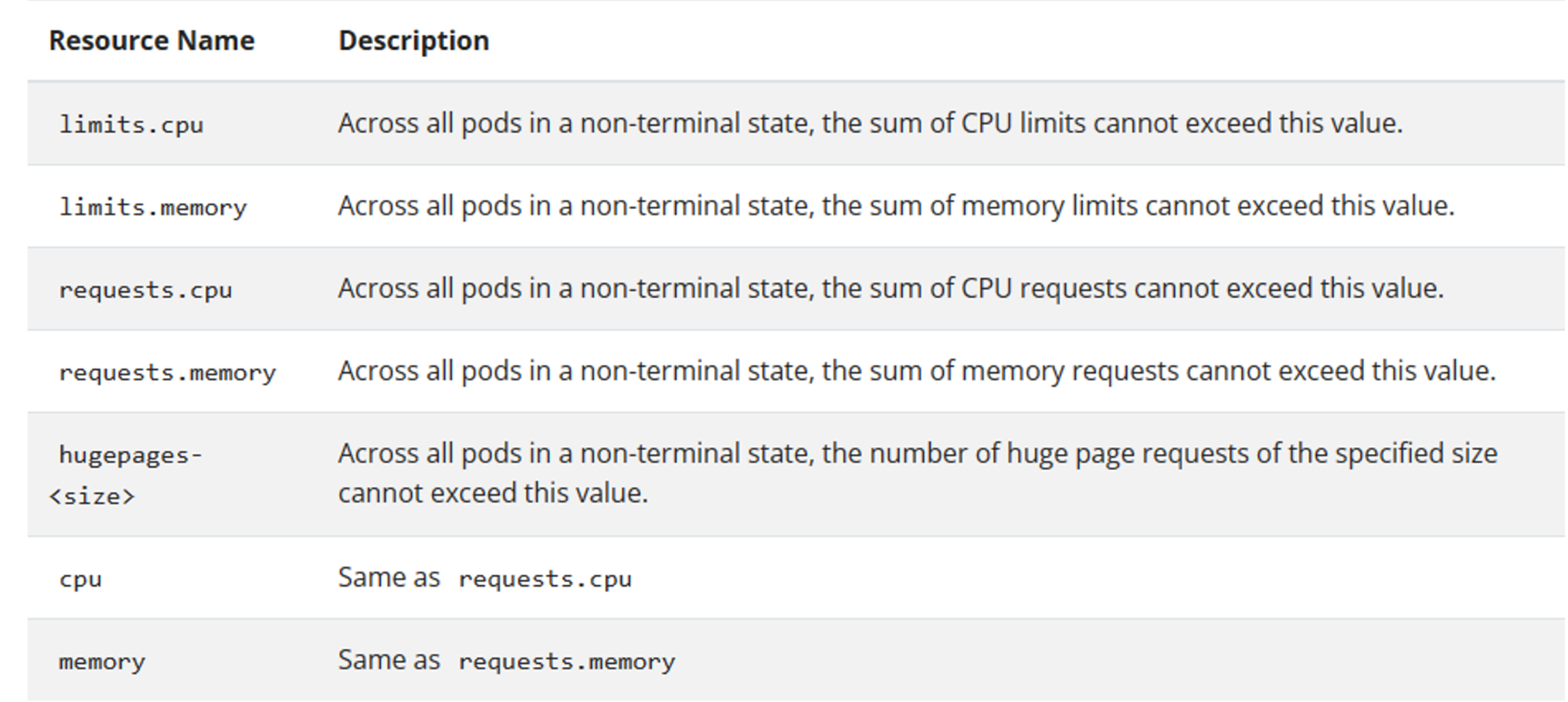

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 kubectl create ns ws kubectl get ns NAME STATUS AGE default Active 18h kube-node-lease Active 18h kube-public Active 18h kube-system Active 18h ws Active 7s kubectl explain resourcequota kubectl explain resourcequota.metadata kubectl explain resourcequota.spec vim ns-quota.yaml apiVersion: v1 KIND: ResourceQuota metadata: name: cpu-quota namespace: ws spec: hard: limits.cpu: "4" limits.memory: 4Gi requests.cpu: "2" requests.memory: 2Gi kubectl apply -f ns-quota.yaml kubectl get resourcequota -n ws NAME AGE REQUEST LIMIT cpu-quota 75s requests.cpu: 0/2 limits.cpu: 0 /4

pod的标签 标签label是一个键值对,能够通过标判断对象的特点,可以一开始创建pod的时候打标签,也可以创建之后打,大部分资源都可以打标签

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 kubectl label pods ws-tomcat user=ws kubectl get pods --show-labels kubectl get pods ws --show-labels kubectl get pods -l user NAME READY STATUS RESTARTS AGE ws-tomcat 1/1 Running 0 5m kubectl get pods -l user=ws kubectl get pods -L user NAME READY STATUS RESTARTS AGE USER nginx-test-5b48846ff4-7n4f6 1/1 Running 1 (68m ago) 28h nginx-test-5b48846ff4-mq5tm 1/1 Running 1 (68m ago) 28h tomcat-test 1/1 Running 0 7m3s ws-tomcat 1/1 Running 0 6m49s ws kubectl get pods --all-namespaces --show-labels

pod资源亲和性 node调度策略nodeName和nodeSelector 在创建pod等资源时,可以通过调整字段进行node调度,指定资源调度到满足何种条件的node

指定nodeName 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 vim testpod1.yaml apiVersion: v1 kind: Pod metadata: name: testpod1 namespace: default labels: app: tomcat spec: nodeName: ws-k8s-node1 containers: - name: test image: docker.io/library/tomcat imagePullPolicy: IfNotPresent kubectl apply -f testpod1.yaml kubectl get pods testpod1 1 /1 Running 0 116s 10.10 .179 .9 ws-k8s-node1 <none> <none>

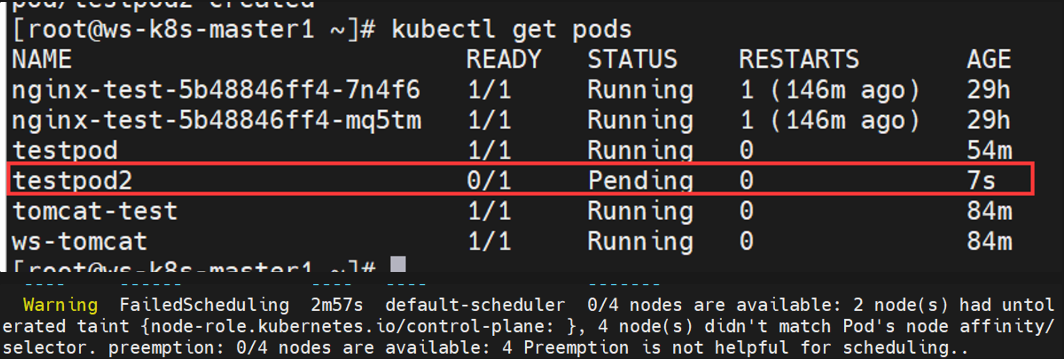

指定nodeSelector 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 vim testpod2.yaml apiVersion: v1 kind: Pod metadata: name: testpod2 namespace: default labels: app: tomcat spec: nodeSelector: admin: ws containers: - name: test image: docker.io/library/tomcat imagePullPolicy: IfNotPresent kubectl apply -f testpod2.yaml 但因为我没有admin=ws标签的node,所以应用后pod处于pending状态 Examples: kubectl label nodes ws-k8s-node1 admin=ws kubectl get pods | grep testpod2 testpod2 1 /1 Running 0 11m kubectl label nodes ws-k8s-node1 admin- kubectl delete pods testpod2

如果同时使用nodeName和nodeSelector,则会报错亲和性错误,无法正常部署;

node亲和性、pod亲和性、pod反亲和性 亲和性在Kubernetes中起着重要作用,通过定义规则和条件,它允许我们实现精确的Pod调度、资源优化、高性能计算以及容错性和可用性的增强。通过利用亲和性,我们可以更好地管理和控制集群中的工作负载,并满足特定的业务需求。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 kubectl explain pods.spec.affinity RESOURCE: affinity <Object> DESCRIPTION: If specified, the pod's scheduling constraints Affinity is a group of affinity scheduling rules. FIELDS: nodeAffinity <Object> Describes node affinity scheduling rules for the pod. podAffinity <Object> Describes pod affinity scheduling rules (e.g. co-locate this pod in the same node, zone, etc. as some other pod(s)). podAntiAffinity <Object> Describes pod anti-affinity scheduling rules (e.g. avoid putting this pod in the same node, zone, etc. as some other pod(s)).

node节点亲和性 在创建pod时,会根据nodeaffinity来寻找最适合该pod的条件的node

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 kubectl explain pods.spec.affinity.nodeAffinity KIND: Pod VERSION: v1 RESOURCE: nodeAffinity <Object> DESCRIPTION: Describes node affinity scheduling rules for the pod. Node affinity is a group of node affinity scheduling rules. FIELDS: preferredDuringSchedulingIgnoredDuringExecution <[]Object> requiredDuringSchedulingIgnoredDuringExecution <Object> preferredDuringSchedulingIgnoredDuringExecution requiredDuringSchedulingIgnoredDuringExecution

硬亲和性 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 kubectl explain pods.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution kubectl explain pods.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms FIELDS: matchExpressions <[]Object> A list of node selector requirements by node's labels. matchFields <[]Object> #匹配字段 A list of node selector requirements by node' s fields.kubectl explain pods.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms.matchExpressions key <string> -required- operator <string> -required- values <[]string> - `"DoesNotExist" ` - `"Exists" ` - `"Gt" ` - `"In" ` - `"Lt" ` - `"NotIn" ` vim ying-pod.yaml apiVersion: v1 kind: Pod metadata: name: ying-pod labels: app: tomcat user: ws spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: name opertor: In values: - ws - wss containers: - name: test1 namespace: default image: docker.io/library/tomcat imagePullPolicy: IfNotPresent kubectl apply -f ying-pod.yaml kubectl get pods | grep ying ying-pod 0/1 Pending 0 15m kubectl label nodes ws-k8s-node1 name=ws kubectl get pod -owide | grep ying ying-pod 0/1 ContainerCreating 0 80s <none> ws-k8s-node1 <none> <none> kubectl label nodes ws-k8s-node1 name-

软亲和性 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 vim ruan-pod.yaml apiVersion: v1 kind: Pod metadata: name: ruan-pod namespace: default spec: containers: - name: test image: docker.io/library/alpine imagePullPolicy: IfNotPresent affinity: nodeAffinity: preferredDuringSchedulingIgnoredDuringExecution: - preference: matchExpressions: - key: name operate: In values: - ws weight: 50 - preference: matchExpressions: - key: name operate: In values: - wws weight: 70 kubectl apply -f ruan-pod.yaml kubectl get pod -owide | grep ruan ruan-pod 0 /1 ContainerCreating 0 3m24s <none> ws-k8s-node2 <none> <none> kubectl label nodes ws-k8s-node1 name=ws kubectl delete -f ruan-pod.yaml kubectl apply -f ruan-pod.yaml kubectl get pods -owide | grep ruan ruan-pod 0 /1 ContainerCreating 0 2s <none> ws-k8s-node1 <none> <none> kubectl label nodes ws-k8s-node2 name=wss kubectl delete -f ruan-pod.yaml kubectl apply -f ruan-pod.yaml kubectl get pods -owide | grep ruan ruan-pod 0 /1 ContainerCreating 0 4m29s <none> ws-k8s-node1 <none> <none> ... - preference: matchExpressions: - key: name operator: In values: - ws weight: 50 - preference: matchExpressions: - key: names operator: In values: - wws weight: 70 ... kubectl label nodes ws-k8s-node2 names=wws kubectl delete -f ruan-pod.yaml kubectl apply -f ruan-pod.yaml kubectl get po -owide | grep ruan ruan-pod 0 /1 ContainerCreating 0 3m47s <none> ws-k8s-node2 <none> <none> kubectl label nodes ws-k8s-node1 name- kubectl label nodes ws-k8s-node2 names- kubectl delete -f ruan-pod.yaml kubectl delete -f ying-pod.yaml --fore --grace-period=0

pod亲和性与反亲和性 pod亲和性(podAffinity)有两种

2.podunaffinity,即两套完全相同,或两套完全不同功能的服务

那么如何判断是不是“同一个区域”就非常重要

1 2 3 4 5 6 kubectl explain pods.spec.affinity.podAffinity preferredDuringSchedulingIgnoredDuringExecution requiredDuringSchedulingIgnoredDuringExecution

pod亲和性

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 kubectl explain pods.spec.affinity.podAffinity.requiredDuringSchedulingIgnoredDuringExecution labelSelector <Object> namespaceSelector <Object> namespaces <[]string> topologyKey <string> -required- cat > qinhe-pod1.yaml << EOF apiVersion: v1 kind: Pod metadata: name: qinhe1 namespace: default labels: user: ws spec: containers: - name: qinhe1 image: docker.io/library/nginx imagePullPolicy: IfNotPresent EOF kubectl apply -f qinhe-pod1.yaml echo " apiVersion: v1 kind: Pod metadata: name: qinhe2 labels: app: app1 spec: containers: - name: qinhe2 image: docker.io/library/nginx imagePullPolicy: IfNotPresent affinity: podAffinity: # 和pod亲和性 requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: # 以标签为筛选条件 matchExpressions: # 以表达式进行匹配 - {key: user, operator: In, values: [" ws"]} topologyKey: kubernetes.io/hostname " > qinhe-pod2.yaml kubectl apply -f qinhe-pod2.yaml kubectl get pods -owide #因为hostname node1和node2不同,所以只会调度到node1 NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES qinhe1 1/1 Running 0 68s 10.10.179.9 ws-k8s-node1 <none> <none> qinhe2 1/1 Running 0 21s 10.10.179.10 ws-k8s-node1 <none> <none> #修改 ... topologyKey: beta.kubernetes.io/arch ... #node1和node2这两个标签都相同 kubectl delete -f qinhe-pod2.yaml kubectl apply -f qinhe-pod2.yaml kubectl get pods -owide #再查看时会发现qinhe2分到了node2 NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES qinhe1 1/1 Running 0 4m55s 10.10.179.9 ws-k8s-node1 <none> <none> qinhe2 1/1 Running 0 15s 10.10.234.68 ws-k8s-node2 <none> <none> #清理环境 kubectl delete -f qinhe-pod1.yaml kubectl delete -f qinhe-pod2.yaml

pod反亲和性

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 kubectl explain pods.spec.affinity.podAntiAffinity preferredDuringSchedulingIgnoredDuringExecution <[]Object> requiredDuringSchedulingIgnoredDuringExecution <[]Object> cat > qinhe-pod3.yaml << EOF apiVersion: v1 kind: Pod metadata: name: qinhe3 namespace: default labels: user: ws spec: containers: - name: qinhe3 image: docker.io/library/nginx imagePullPolicy: IfNotPresent EOF echo " apiVersion: v1 kind: Pod metadata: name: qinhe4 labels: app: app1 spec: containers: - name: qinhe4 image: docker.io/library/nginx imagePullPolicy: IfNotPresent affinity: podAntiAffinity: # 和pod亲和性 requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: # 以标签为筛选条件 matchExpressions: # 以表达式进行匹配 - {key: user, operator: In, values: [" ws"]} #表达式user=ws topologyKey: kubernetes.io/hostname #以hostname作为区分是否同个区域 " > qinhe-pod4.yamlkubectl apply -f qinhe-pod3.yaml kubectl apply -f qinhe-pod4.yaml kubectl get pods -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES qinhe3 1/1 Running 0 9s 10.10.179.11 ws-k8s-node1 <none> <none> qinhe4 1/1 Running 0 8s 10.10.234.70 ws-k8s-node2 <none> <none> pod4修改为topologyKey: user kubectl label nodes ws-k8s-node1 user=xhy kubectl label nodes ws-k8s-node2 user=xhy kubectl delete -f qinhe-pod4.yaml kubectl apply -f qinhe-pod4.yaml kubectl get pods -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES qinhe3 1/1 Running 0 9m59s 10.10.179.12 ws-k8s-node1 <none> <none> qinhe4 0/1 Pending 0 2s <none> <none> <none> <none> Warning FailedScheduling 74s default-scheduler 0/4 nodes are available: 2 node(s) didn't match pod anti-affinity rules, 2 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.. #pod反亲和性的软亲和性与node亲和性的软亲和性同理 #清理环境 kubectl label nodes ws-k8s-node1 user- kubectl label nodes ws-k8s-node2 user- kubectl delete -f qinhe-pod3.yaml kubectl delete -f qinhe-pod4.yaml

污点与容忍度 污点类似于标签,可以给node打taints,在创建pod时可以通过tolerations来定义pod对于污点的容忍度

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 kubectl describe node ws-k8s-master1 | grep -i taint Taints: node-role.kubernetes.io/control-plane:NoSchedule kubectl describe node ws-k8s-node1 | grep -i taint Taints: <none> kubectl explain nodes.spec.taints.effect 1. NoExecute 对已调度的pod不影响,仅对新需要调度的pod进行影响 2. NoSchedule 对已调度和新调度的pod都会有影响 3. PreferNoSchedule 软性的NoSchedule,就算不满足条件也可以调度到不容忍的node上 kubectl get pods -n kube-system -owide kubectl describe pods kube-proxy-bg7ck -n kube-system | grep -i tolerations -A 10 Tolerations: op=Exists node.kubernetes.io/disk-pressure:NoSchedule op=Exists node.kubernetes.io/memory-pressure:NoSchedule op=Exists node.kubernetes.io/network-unavailable:NoSchedule op=Exists node.kubernetes.io/not-ready:NoExecute op=Exists node.kubernetes.io/pid-pressure:NoSchedule op=Exists node.kubernetes.io/unreachable:NoExecute op=Exists node.kubernetes.io/unschedulable:NoSchedule op=Exists Events: <none> kubectl taint node ws-k8s-node1 user=ws:NoSchedule cat > wudian.yaml << EOF apiVersion: v1 kind: Pod metadata: name: wudain-pod namespace: default labels: app: app1 spec: containers: - name: wudian-pod image: docker.io/library/tomcat imagePullPolicy: IfNotPresent EOF kubectl apply -f wudian.yaml kubectl get pods -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES wudain-pod 1 /1 Running 0 18s 10.10 .234 .72 ws-k8s-node2 <none> <none> kubectl taint node ws-k8s-node2 user=xhy:NoExecute kubectl get pods -owide No resources found in default namespace. kubectl apply -f wudian.yaml kubectl get pods -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES wudain-pod 0 /1 Pending 0 3s <none> <none> <none> <none> kubectl describe node ws-k8s-node1 | grep -i taint Taints: user=ws:NoSchedule kubectl describe node ws-k8s-node2 | grep -i taint Taints: user=xhy:NoExecute cat > wudian2.yaml << EOF apiVersion: v1 kind: Pod metadata: name: wudain2-pod namespace: default labels: app: app1 spec: containers: - name: wudian2-pod image: docker.io/library/tomcat imagePullPolicy: IfNotPresent tolerations: - key: "user" operator: "Equal" value: "ws" effect: "NoSchedule" EOF kubectl apply -f wudian2.yaml kubectl get pods -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES wudain-pod 0 /1 Pending 0 21m <none> <none> <none> <none> wudain2-pod 1 /1 Running 0 15s 10.10 .179 .13 ws-k8s-node1 <none> <none> cat > wudian3.yaml << EOF apiVersion: v1 kind: Pod metadata: name: wudain3-pod namespace: default labels: app: app1 spec: containers: - name: wudian3-pod image: docker.io/library/tomcat imagePullPolicy: IfNotPresent tolerations: - key: "user" operator: "Exists" value: "" effect: "NoExecute" tolerationSeconds: 1800 EOF kubectl apply -f wudian3.yaml kubectl get pods -owide | grep -i node2 wudain3-pod 1 /1 Running 0 59s 10.10 .234 .73 ws-k8s-node2 <none> <none> kubectl delete -f wudian.yaml kubectl delete -f wudian2.yaml kubectl delete -f wudian3.yaml kubectl taint node ws-k8s-node1 user- kubectl taint node ws-k8s-node2 user-

k8s pod重启策略 pod状态与重启策略 参考文档:Pod 的生命周期 | Kubernetes

pod状态 1.pending——挂起

2.failed——失败

3.unknown——未知

4.Error——错误

5.succeeded——成功

6.Unschedulable

7.PodScheduled

8.Initialized

9.ImagePullBackOff

10.evicted

11.CrashLoopBackOff

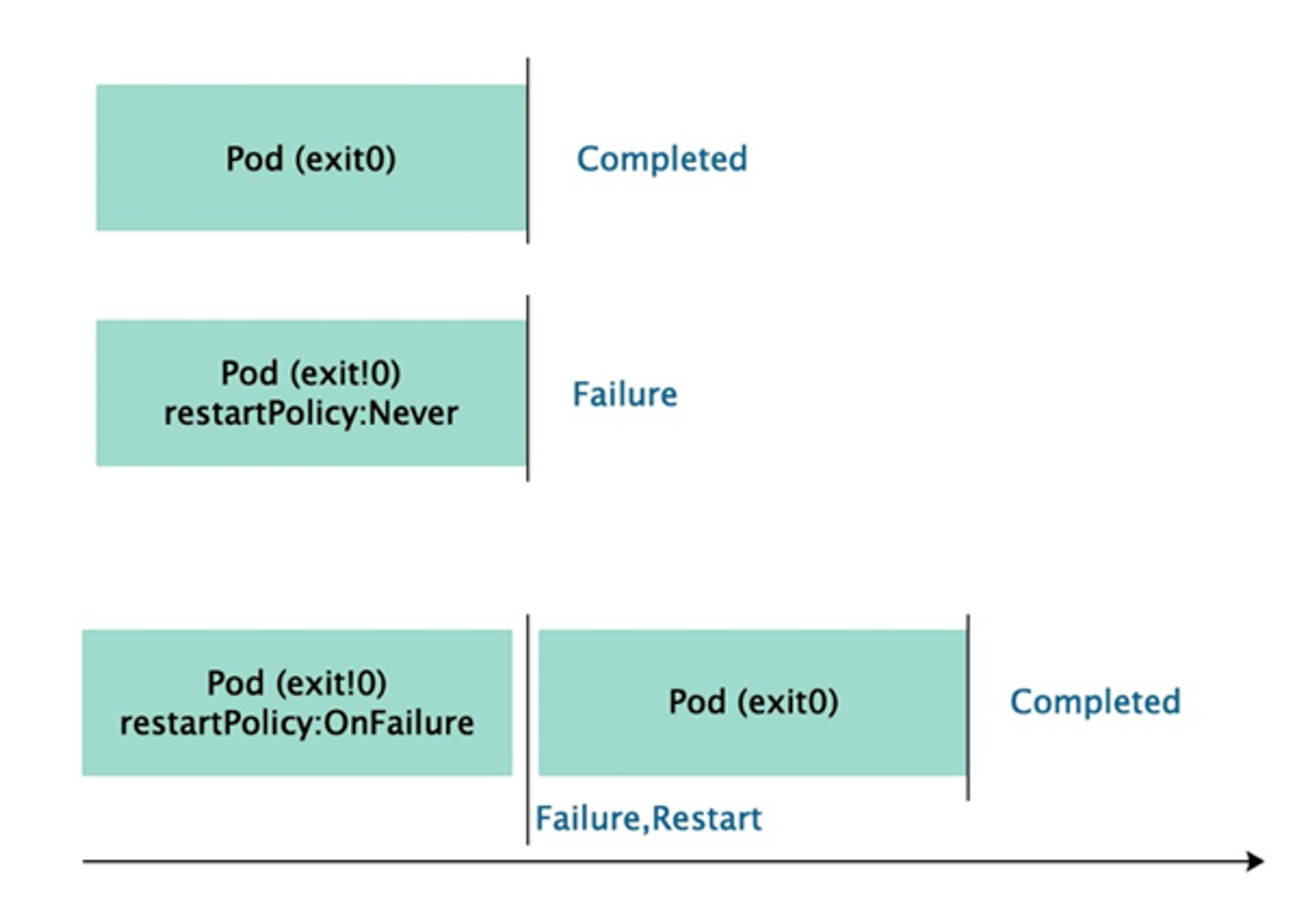

pod重启策略 当容器异常时,可以通过设置RestartPolicy字段,设置pod重启策略来对pod进行重启等操作

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 kubectl explain pod.spec.restartPolicy KIND: Pod VERSION: v1 FIELD: restartPolicy <string> DESCRIPTION: Restart policy for all containers within the pod. One of Always, OnFailure, Never. Default to Always. More info: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#restart-policy Possible enum values: - `"Always"` - `"Never"` - `"OnFailure"`#容器错误退出,即退出码不为0时,则自动重启 cat > always.yaml << EOF apiVersion: v1 kind: Pod metadata: name: always-pod namespace: default spec: restartPolicy: Always containers: - name: test-pod image: docker.io/library/tomcat imagePullPolicy: IfNotPresent EOF kubectl apply -f always.yaml kubectl get po NAME READY STATUS RESTARTS AGE always-pod 1 /1 Running 0 22s kubectl exec -it always-pod -- /bin/bash shutdown.sh kubectl get po NAME READY STATUS RESTARTS AGE always-pod 1 /1 Running 1 (5s ago) 70s cat > never.yaml << EOF apiVersion: v1 kind: Pod metadata: name: never-pod namespace: default spec: restartPolicy: Never containers: - name: test-pod image: docker.io/library/tomcat imagePullPolicy: IfNotPresent EOF kubectl apply -f never.yaml kubectl exec -it never-pod -- /bin/bash shutdown.sh kubectl get pods | grep never never-pod 0 /1 Completed 0 73s cat > onfailure.yaml << EOF apiVersion: v1 kind: Pod metadata: name: onfailure-pod namespace: default spec: restartPolicy: OnFailure containers: - name: test-pod image: docker.io/library/tomcat imagePullPolicy: IfNotPresent EOF kubectl apply -f onfailure.yaml kubectl exec -it onfailure-pod -- /bin/bash kill 1 kubectl get po | grep onfailure onfailure-pod 1 /1 Running 1 (43s ago) 2m11s kubectl exec -it onfailure-pod -- /bin/bash shutdown.sh kubectl get po | grep onfailure onfailure-pod 0 /1 Completed 1 3m58s kubectl delete -f always.yaml kubectl delete -f never.yaml kubectl delete -f onfailure.yaml

pod生命周期——容器钩子与容器探测 参考资料

Pod 的生命周期 | Kubernetes

Init 容器 | Kubernetes

Pod的生命周期可以分为以下几个阶段:

Pending(等待):在这个阶段,Pod被创建,并且正在等待被调度到一个节点上运行。此时,Pod的容器镜像正在下载,网络和存储资源正在分配。

Running(运行中):一旦Pod成功调度到节点上,它进入Running状态。在此阶段,Pod中的容器开始在节点上运行,并且可以处理请求。

Succeeded(成功):如果Pod中的所有容器成功完成了它们的任务,并且退出状态码为0,那么Pod将进入Succeeded状态。一般情况下,这意味着Pod已经完成了它的工作。

Failed(失败):如果Pod中的任何容器以非零的退出状态码退出,或者其中一个容器无法启动,那么Pod将进入Failed状态。这表示Pod执行出现了问题。

Unknown(未知):如果无法获取Pod的状态信息,或者与Pod关联的节点失去联系,那么Pod将进入Unknown状态。

除了这些基本的生命周期阶段,Pod还可以经历一些其他的状态转换,例如:

Terminating(终止中):当Pod被删除或终止时,它进入Terminating状态。在此阶段,Pod的容器正在停止,并且资源正在释放。

Evicted(驱逐):如果节点上的资源不足,Kubernetes可能会驱逐Pod,将其从节点上移除。这将导致Pod进入Evicted状态。

ContainerCreating(创建容器):当Pod的容器正在创建时,Pod将进入ContainerCreating状态。这通常发生在调度期间,当容器镜像正在下载或容器正在启动时。

这些状态和状态转换代表了Pod在其生命周期中可能经历的不同阶段和情况。Kubernetes通过监控和管理Pod的状态来确保Pod的正常运行和可靠性。

Pod生命周期一般包含以下几个流程:

1、创建pause容器

2、创建初始化容器

初始化容器是串行运行的,一个初始化容器运行成功才能运行下一个初始化容器,全部执行完才能执行主容器,并且初始化容器内的数据可以被主容器用到。

初始化容器不支持pod就绪探测,因为初始化容器在pod就绪之前就已经完成

如果初始化容器运行失败,k8s也会根据重启策略restartPolicy决定是否进行重启

3、主容器

4、前置钩子/容器停止前钩子(PreStop Hook)

5、后置钩子/容器启动后钩子(PostStart Hook)

初始化容器 参考资料Init Containers | Kubernetes

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 kubectl explain pod.spec.initContainers cat > init1.yaml << EOF apiVersion: v1 kind: Pod metadata: name: init1 namespace: default spec: initContainers: - name: init1 image: docker.io/library/nginx imagePullPolicy: IfNotPresent command: ["echo" ,"the first test" ] - name: init2 image: docker.io/library/nginx imagePullPolicy: IfNotPresent command: ["/bin/bash" ,"-c" ,"echo 'the secend test'" ] containers: - name: test image: docker.io/library/nginx imagePullPolicy: IfNotPresent EOF kubectl apply -f init1.yaml kubectl get pods -w NAME READY STATUS RESTARTS AGE init1 0 /1 Pending 0 0s init1 0 /1 Pending 0 0s init1 0 /1 Init:0/2 0 0s init1 0 /1 Init:0/2 0 1s init1 0 /1 Init:1/2 0 2s init1 0 /1 PodInitializing 0 3s init1 1 /1 Running 0 4s

容器钩子 参考文档 https://kubernetes.io/docs/concepts/containers/container-lifecycle-hooks/#container-hooks

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 容器启动后钩子(PostStart 容器停止前钩子(PreStop kubectl explain pods.spec.containers.lifecycle postStart <Object> preStop <Object> kubectl explain pods.spec.containers.lifecycle.postStart exec <Object> httpGet <Object> tcpSocket <Object> cat > hook.yaml << EOF apiVersion: v1 kind: Pod metadata: name: hook namespace: default spec: containers: - name: test image: docker.io/library/nginx imagePullPolicy: IfNotPresent lifecycle: postStart: exec: command: ["/bin/sh" , "-c" , "echo 'test'" ] preStop: exec: command: ["/bin/sh" ,"-c" ,"pkill ssh" ] EOF kubectl apply -f hook.yaml get pods -w NAME READY STATUS RESTARTS AGE init1 1 /1 Running 0 57m hook 0 /1 Pending 0 0s hook 0 /1 Pending 0 0s hook 0 /1 ContainerCreating 0 0s hook 0 /1 ContainerCreating 0 0s hook 1 /1 Running 0 1s kubectl delete -f hook.yaml kubectl delete -f init1.yaml

容器探测 容器探测包括启动探测,就绪探测与存活探测

1、启动探测Startup Probe

用于检测容器内的应用程序是否仍在运行。

如果启动探测失败,则 Kubernetes 认为容器处于不健康状态,并尝试重新启动容器。

如果启动探测成功,则容器被认为是健康的,并继续正常运行。

常见的启动探测方式包括发送 HTTP 请求到容器的特定端点或执行命令并检查返回值。

2、就绪探测Readiness Probe

用于检测容器是否已经启动完成并准备好接收流量。

就绪探测与存活探测类似,但是 在容器启动期间进行检测,而不仅仅是容器启动后。

如果就绪探测失败,则 Kubernetes 认为容器尚未启动完成,将从服务负载均衡中剔除该容器。

如果就绪探测成功,则容器被认为已经启动完成并准备好接收流量。

常见的就绪探测方式与存活探测相似,包括发送 HTTP 请求或执行命令。

3、存活探测Liveness Probe

用于检测容器是否准备好接收流量。

如果存活探测失败,则 Kubernetes 认为容器尚未准备好处理流量,将从服务负载均衡中剔除该容器。

如果存活探测成功,则容器被认为是准备好接收流量的,并加入到服务负载均衡中。

常见的存活探测方式包括发送 HTTP 请求到容器的特定端点或执行命令并检查返回值。

存活探测与就绪探测的区别:

k8s中启动探测会最先进行,就绪探测和存活探测会同时进行

参考资料:配置存活、就绪和启动探针 | Kubernetes

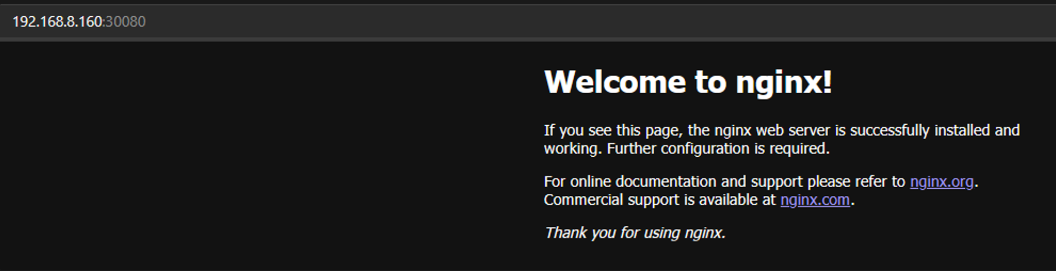

启动探测 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 kubectl explain pod.spec.containers.startupProbe exec <Object> failureThreshold <integer> grpc <Object> httpGet <Object> initialDelaySeconds <integer> periodSeconds <integer> successThreshold <integer> tcpSocket <Object> terminationGracePeriodSeconds <integer> timeoutSeconds <integer> cat > qidongtance-command.yaml << EOF apiVersion: v1 kind: Pod metadata: name: qidong namespace: default spec: containers: - name: test image: docker.io/library/nginx imagePullPolicy: IfNotPresent startupProbe: exec: command: - "/bin/sh" - "-c" - "echo '1'" initialDelaySeconds: 10 periodSeconds: 5 successThreshold: 1 failureThreshold: 3 timeoutSeconds: 5 EOF kubectl apply -f qidongtance-command.yaml kubectl get pods -w NAME READY STATUS RESTARTS AGE qidong 0 /1 Pending 0 0s qidong 0 /1 Pending 0 0s qidong 0 /1 ContainerCreating 0 0s qidong 0 /1 ContainerCreating 0 1s qidong 0 /1 Running 0 2s qidong 0 /1 Running 0 16s qidong 1 /1 Running 0 16s ... startupProbe: exec: command: - "/bin/sh" - "-c" - "qweasdaq" ... kubectl delete -f qidongtance-command.yaml kubectl apply -f qidongtance-command.yaml kubectl get pods -w NAME READY STATUS RESTARTS AGE qidong 1 /1 Running 0 78s qidong 1 /1 Terminating 0 5m6s qidong 1 /1 Terminating 0 5m6s qidong 0 /1 Terminating 0 5m6s qidong 0 /1 Terminating 0 5m6s qidong 0 /1 Terminating 0 5m6s qidong 0 /1 Pending 0 0s qidong 0 /1 Pending 0 0s qidong 0 /1 ContainerCreating 0 0s qidong 0 /1 ContainerCreating 0 1s qidong 0 /1 Running 0 1s qidong 0 /1 Running 1 (2s ago) 27s qidong 0 /1 Running 2 (2s ago) 47s qidong 0 /1 Running 2 (5s ago) 50s qidong 0 /1 Running 3 (1s ago) 66s qidong 0 /1 Running 4 (1s ago) 86s qidong 0 /1 CrashLoopBackOff 4 (1s ago) 106s kubectl delete -f qidongtance-command.yaml cat > qidongtance-tcp.yaml << EOF apiVersion: v1 kind: Pod metadata: name: qidong namespace: default spec: containers: - name: test image: docker.io/library/nginx imagePullPolicy: IfNotPresent ports: - containerPort: 80 startupProbe: tcpSocket: port: 80 initialDelaySeconds: 10 periodSeconds: 5 successThreshold: 1 failureThreshold: 3 timeoutSeconds: 5 EOF kubectl apply -f qidongtance-tcp.yaml kubectl get pods -w NAME READY STATUS RESTARTS AGE qidong 0 /1 Pending 0 0s qidong 0 /1 Pending 0 0s qidong 0 /1 ContainerCreating 0 0s qidong 0 /1 ContainerCreating 0 1s qidong 0 /1 Running 0 1s qidong 0 /1 Running 0 16s qidong 1 /1 Running 0 16s qidong 1 /1 Running 0 22s curl 10.10 .234 .91 :80 <!DOCTYPE html> <html> <head> <title>Welcome to nginx!</title> <style> html { color-scheme: light dark; }body { width: 35em; margin: 0 auto; font-family: Tahoma , Verdana , Arial , sans-serif; }</style> </head> <body> <h1>Welcome to nginx!</h1> <p>If you see this page, the nginx web server is successfully installed and working. Further configuration is required.</p> <p>For online documentation and support please refer to <a href="http://nginx.org/">nginx.org</a>.<br/> Commercial support is available at <a href="http://nginx.com/">nginx.com</a>.</p> <p><em>Thank you for using nginx.</em></p> </body> </html> kubectl delete -f qidongtance-tcp.yaml cat > qidongtance-httpget.yaml << EOF apiVersion: v1 kind: Pod metadata: name: qidong namespace: default spec: containers: - name: test image: docker.io/library/nginx imagePullPolicy: IfNotPresent ports: - containerPort: 80 startupProbe: httpGet: path: / port: 80 initialDelaySeconds: 10 periodSeconds: 5 successThreshold: 1 failureThreshold: 3 timeoutSeconds: 5 EOF kubectl apply -f qidongtance-httpget.yaml kubectl get pods -w NAME READY STATUS RESTARTS AGE qidong 0 /1 Pending 0 0s qidong 0 /1 Pending 0 0s qidong 0 /1 ContainerCreating 0 0s qidong 0 /1 ContainerCreating 0 1s qidong 0 /1 Running 0 2s qidong 0 /1 Running 0 16s qidong 1 /1 Running 0 16s curl 10.10 .234 .93 :80 <!DOCTYPE html> <html> <head> <title>Welcome to nginx!</title> <style> html { color-scheme: light dark; }body { width: 35em; margin: 0 auto; font-family: Tahoma , Verdana , Arial , sans-serif; }</style> </head> <body> <h1>Welcome to nginx!</h1> <p>If you see this page, the nginx web server is successfully installed and working. Further configuration is required.</p> <p>For online documentation and support please refer to <a href="http://nginx.org/">nginx.org</a>.<br/> Commercial support is available at <a href="http://nginx.com/">nginx.com</a>.</p> <p><em>Thank you for using nginx.</em></p> </body> </html> kubectl delete -f qidongtance-httpget.yaml

存活探测 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 kubectl explain pod.spec.containers.livenessProbe cat > cunhuotance-command.yaml << EOF apiVersion: v1 kind: Pod metadata: name: cunhuo namespace: default spec: containers: - name: test image: busybox:1.28 imagePullPolicy: IfNotPresent args: - "/bin/sh" - "-c" - "touch /1.txt; sleep 20; rm -rf /1.txt" livenessProbe: initialDelaySeconds: 10 periodSeconds: 5 exec: command: - cat - /1.txt EOF kubectl apply -f cunhuotance-command.yaml kubectl get pods -w NAME READY STATUS RESTARTS AGE cunhuo 1 /1 Running 0 8s cunhuo 0 /1 Completed 0 21s cunhuo 1 /1 Running 1 (2s ago) 22s cunhuo 0 /1 Completed 1 (23s ago) 43s cunhuo 0 /1 CrashLoopBackOff 1 (4s ago) 45s cunhuo 1 /1 Running 2 (15s ago) 56s kubectl get pods -w NAME READY STATUS RESTARTS AGE cunhuo 0 /1 CrashLoopBackOff 2 (12s ago) 88s cunhuo 1 /1 Running 3 (32s ago) 108s cunhuo 0 /1 Completed 3 (52s ago) 2m8s cunhuo 0 /1 CrashLoopBackOff 3 (2s ago) 2m10s cunhuo 0 /1 CrashLoopBackOff 3 (26s ago) 2m34s kubectl delete -f cunhuotance-command.yaml

就绪探测 就绪探测主要用于与pod与service相对接的场景下进行使用

探测pod内接口,探测成功则代表程序启动,就开放对外的接口访问,如果探测失败,则暂时不开放接口访问,直到探测成功

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 apiVersion: v1 kind: Service metadata: name: java spec: type: NodePort ports: - name: yewu port: 8080 targetPort: 8080 nodePort: 31180 - name: guanli port: 8081 targetPort: 8081 nodePort: 31181 selector: app: java --- apiVersion: v1 kind: Pod metadata: name: java spec: containers: - name: java labels: app: java image: xxxxx imagePullPolicy: IfNotPresent ports: - name: yewu containerPort: 8080 - name: guanli containerPort: 8081 readinessProbe: initialDelaySeconds: 10 periodSeconds: 5 timeoutSeconds: 5 httpGet: scheme: HTTP port: 8081 path: xxx

k8s控制器ReplicaSet与Deployment 控制器:

在Kubernetes(简称K8s)中,控制器是负责管理和维护集群中资源状态的组件。控制器监视集群中的对象,并根据它们的预期状态来采取行动,以确保系统的期望状态与实际状态保持一致。

对于自主式pod来说,删除pod之后pod就直接消失了,如果因为一些误操作或pod错误退出,就不会自动恢复,这个时候就需要使用k8s的控制器,使用控制器创建的pod可以进行故障的恢复与自愈,并且也可以做资源调度、配置管理等内容

ReplicaSet ReplicaSet是Kubernetes中的一种控制器,用于确保一组Pod副本的运行。它定义了所需的Pod副本数量,并监控它们的运行状态,以确保始终有指定数量的副本在运行。

用的不多,大多数环境中使用deployment资源,deployment的功能包括ReplicaSet

定义ReplicaSet时,需要定义要创建的pod的模板,相当于pod做了多份的负载均衡

以下是一个replicatest的示例文件

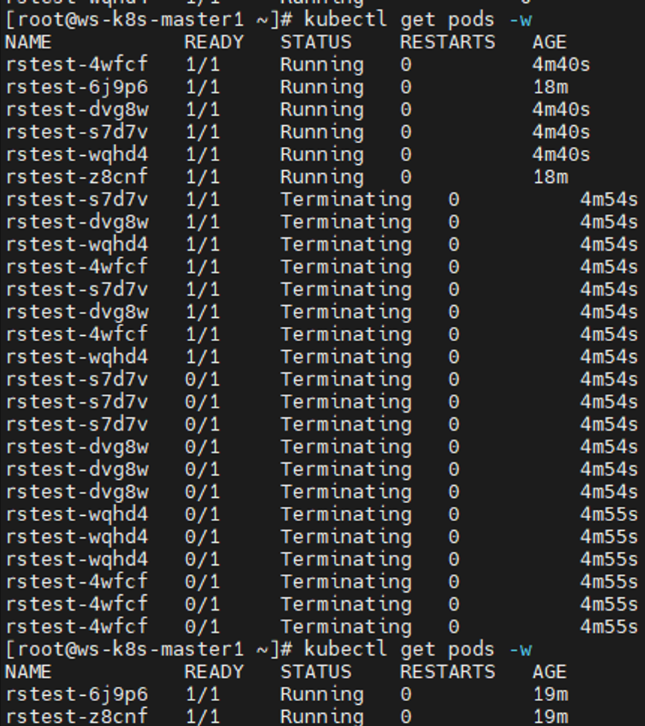

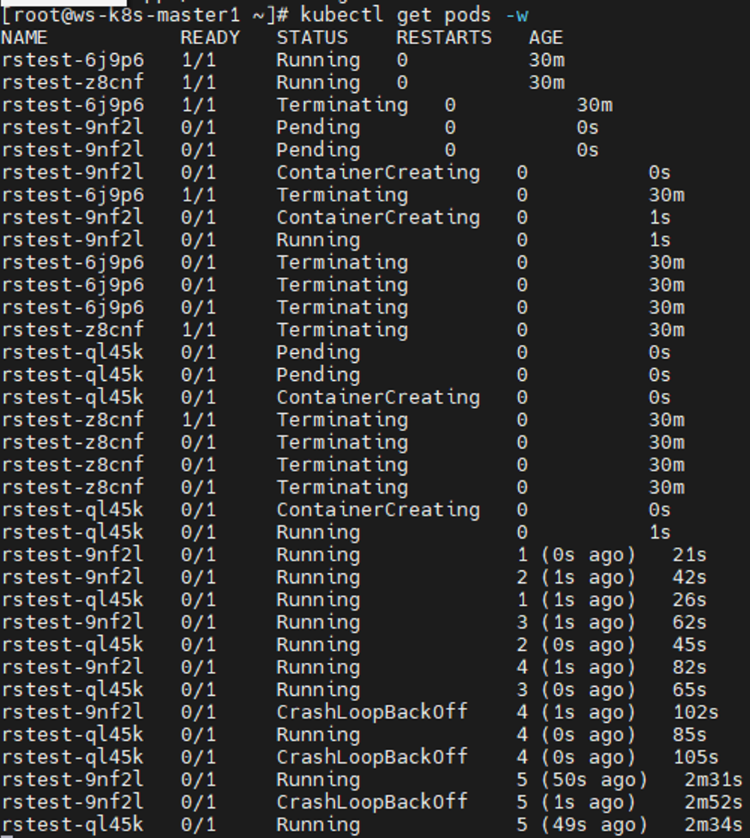

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 kubectl explain rs apiVersion <string> kind <string> metadata <Object> spec <Object> status <Object> kubectl explain rs.spec minReadySeconds replicas selector template kubectl explain rs.spec.template.spec cat > rs.yaml << EOF apiVersion: apps/v1 kind: ReplicaSet metadata: name: rstest namespace: default spec: replicas: 5 selector: matchLabels: user: ws template: metadata: labels: user: ws spec: containers: - name: test1 image: docker.io/library/nginx imagePullPolicy: IfNotPresent ports: - containerPort: 80 startupProbe: periodSeconds: 5 initialDelaySeconds: 10 timeoutSeconds: 5 httpGet: scheme: HTTP port: 80 path: / EOF kubectl apply -f rs.yaml kubectl get pods -w | grep Running rstest-2qbrw 1 /1 Running 0 2m34s rstest-6j9p6 1 /1 Running 0 2m34s rstest-ltpn5 1 /1 Running 0 2m34s rstest-z7h27 1 /1 Running 0 2m34s rstest-z8cnf 1 /1 Running 0 2m34s kubectl get rs NAME DESIRED CURRENT READY AGE rstest 5 5 5 2m56s、 kubectl delete pods rstest-hrvtj kubectl get pods -w | grep Running rstest-6j9p6 1 /1 Running 0 6m41s rstest-hrvtj 1 /1 Running 0 32s rstest-ltpn5 1 /1 Running 0 6m41s rstest-z7h27 1 /1 Running 0 6m41s rstest-z8cnf 1 /1 Running 0 6m41s rstest-rmxcq 0 /1 Running 0 1s rstest-rmxcq 0 /1 Running 0 10s rstest-rmxcq 1 /1 Running 0 10s ... replicas: 6 ... kubectl apply -f rs.yaml kubectl get pods -w | grep Running rstest-6j9p6 1 /1 Running 0 12m rstest-ltpn5 1 /1 Running 0 12m rstest-rmxcq 1 /1 Running 0 5m29s rstest-z7h27 1 /1 Running 0 12m rstest-z8cnf 1 /1 Running 0 12m rstest-zwgnl 0 /1 Running 0 1s rstest-zwgnl 0 /1 Running 0 10s rstest-zwgnl 1 /1 Running 0 10s ... replicas: 2 ... kubectl apply -f rs.yaml

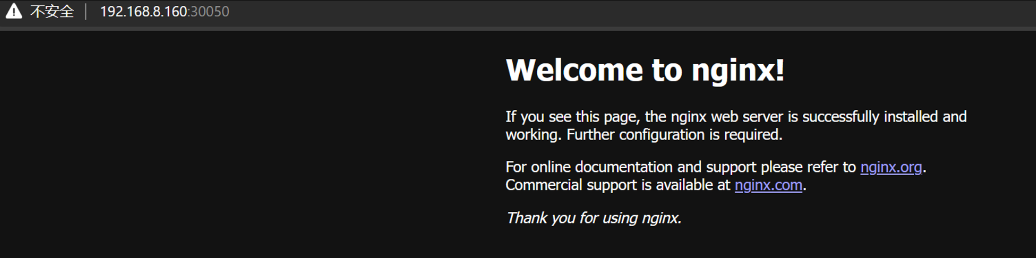

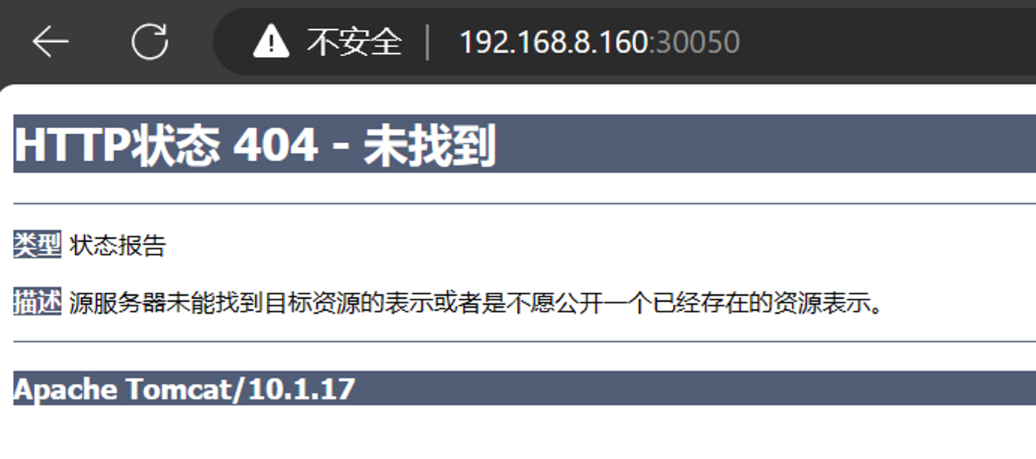

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 curl 10.10 .179 .34 :80 <!DOCTYPE html> <html> <head> <title>Welcome to nginx!</title> <style> html { color-scheme: light dark; }body { width: 35em; margin: 0 auto; font-family: Tahoma , Verdana , Arial , sans-serif; }</style> </head> <body> <h1>Welcome to nginx!</h1> <p>If you see this page, the nginx web server is successfully installed and working. Further configuration is required.</p> <p>For online documentation and support please refer to <a href="http://nginx.org/">nginx.org</a>.<br/> Commercial support is available at <a href="http://nginx.com/">nginx.com</a>.</p> <p><em>Thank you for using nginx.</em></p> </body> </html> ... image: docker.io/library/tomcat ... kubectl apply -f rs.yaml kubectl delete pods rstest-6j9p6 kubectl delete pods rstest-z8cnf kubectl get pods -w NAME READY STATUS RESTARTS AGE rstest-c2m98 1 /1 Running 0 3m54s rstest-xkqnl 1 /1 Running 0 3m54s curl 10.10 .234 .124 :8080 <!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title><style type="text/css">body {font-family:Tahoma ,Arial ,sans-serif; } h1, h2, h3, b {color:white;background-color:#525D76; } h1 {font-size:22px; } h2 {font-size:16px; } h3 {font-size:14px; } p {font-size:12px; } a {color:black; } .line {height:1px;background-color:#525D76;border:none; }</style></head><body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p><p><b>Description</b> The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.</p><hr class="line" /><h3>Apache Tomcat/10.1.17</h3></body></html>[ kubectl delete -f rs.yaml

Deployment Deployment是Kubernetes中的一个重要组件,用于管理应用程序的部署和更新。它提供了一种声明性的方式来定义应用程序的期望状态,并确保集群中的Pod按照这个状态进行部署和维护。

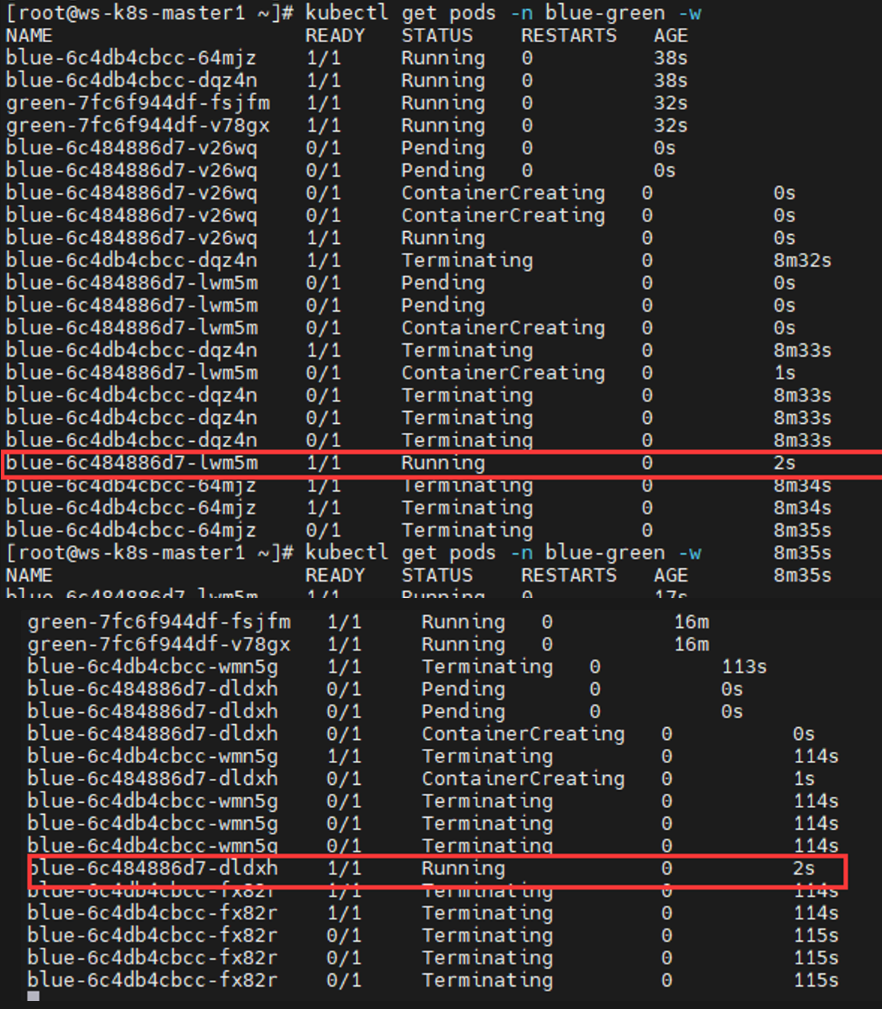

Deployment可以管理多个rs,进行滚动更新时,会使用新的rs,只同时使用一个rs。并且Deployment支持多种更新策略

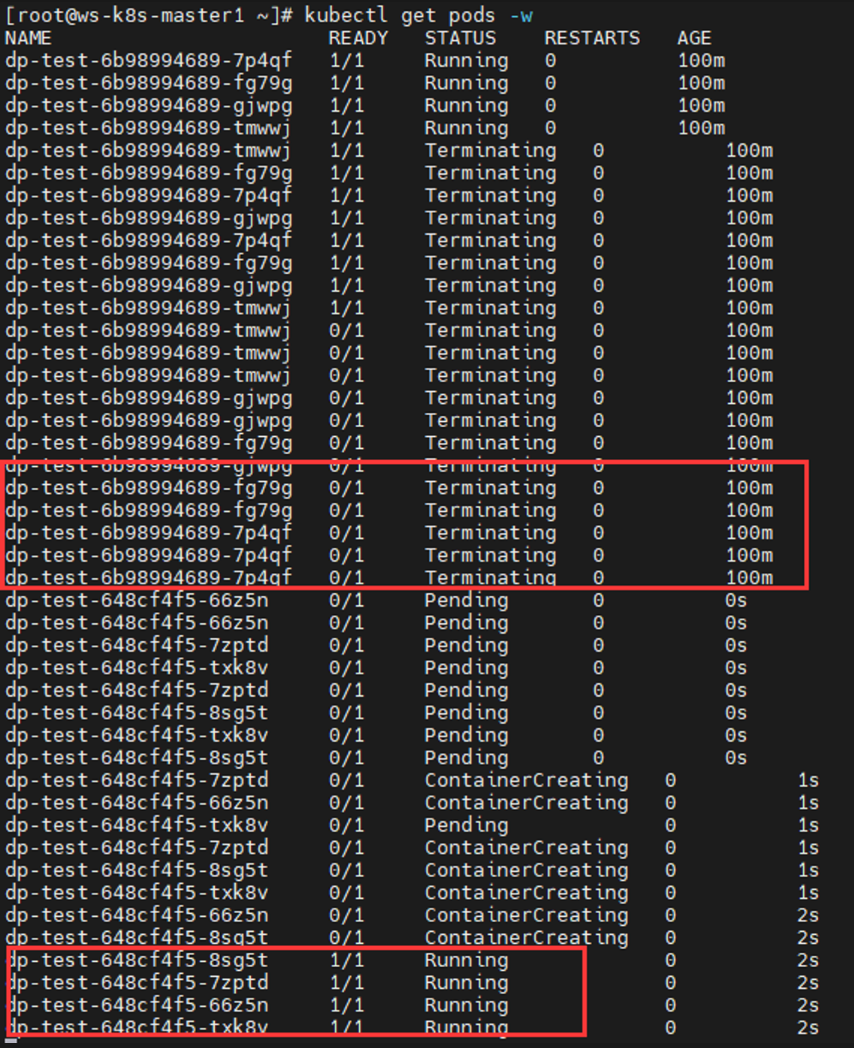

yaml文件编写 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 kubectl explain Deployment apiVersion <string> kind <string> metadata <Object> spec <Object> status <Object> kubectl explain Deployment.spec minReadySeconds <integer> paused <boolean> progressDeadlineSeconds <integer> replicas <integer> revisionHistoryLimit <integer> selector <Object> -required- strategy <Object> template <Object> -required- kubectl explain Deployment.spec.strategy rollingUpdate <Object> type <string> Possible enum values: - `"Recreate"` Kill all existing pods before creating new ones. - `"RollingUpdate"` Replace the old ReplicaSets by new one using rolling update i.e gradually scale down the old ReplicaSets and scale up the new one. kubectl explain Deployment.spec.strategy.rollingUpdate maxSurge <string> maxUnavailable <string> kubectl explain Deployment.spec.template metadata <Object> spec <Object> cat > dp.yaml << EOF apiVersion: apps/v1 kind: Deployment metadata: name: dp-test namespace: default labels: app: tomcat spec: replicas: 3 revisionHistoryLimit: 5 selector: matchLabels: app: test template: metadata: name: demo labels: app: test spec: containers: - name: dp1 image: docker.io/library/nginx imagePullPolicy: IfNotPresent ports: - containerPort: 80 EOF kubectl apply -f dp.yaml kubectl get deploy NAME READY UP-TO-DATE AVAILABLE AGE dp-test 3 /3 3 3 69s kubectl get rs NAME DESIRED CURRENT READY AGE dp-test-648cf4f5 3 3 3 114s kubectl get pods NAME READY STATUS RESTARTS AGE dp-test-648cf4f5-hbhmx 1 /1 Running 0 2m7s dp-test-648cf4f5-x9gb4 1 /1 Running 0 2m7s dp-test-648cf4f5-znktp 1 /1 Running 0 2m7s

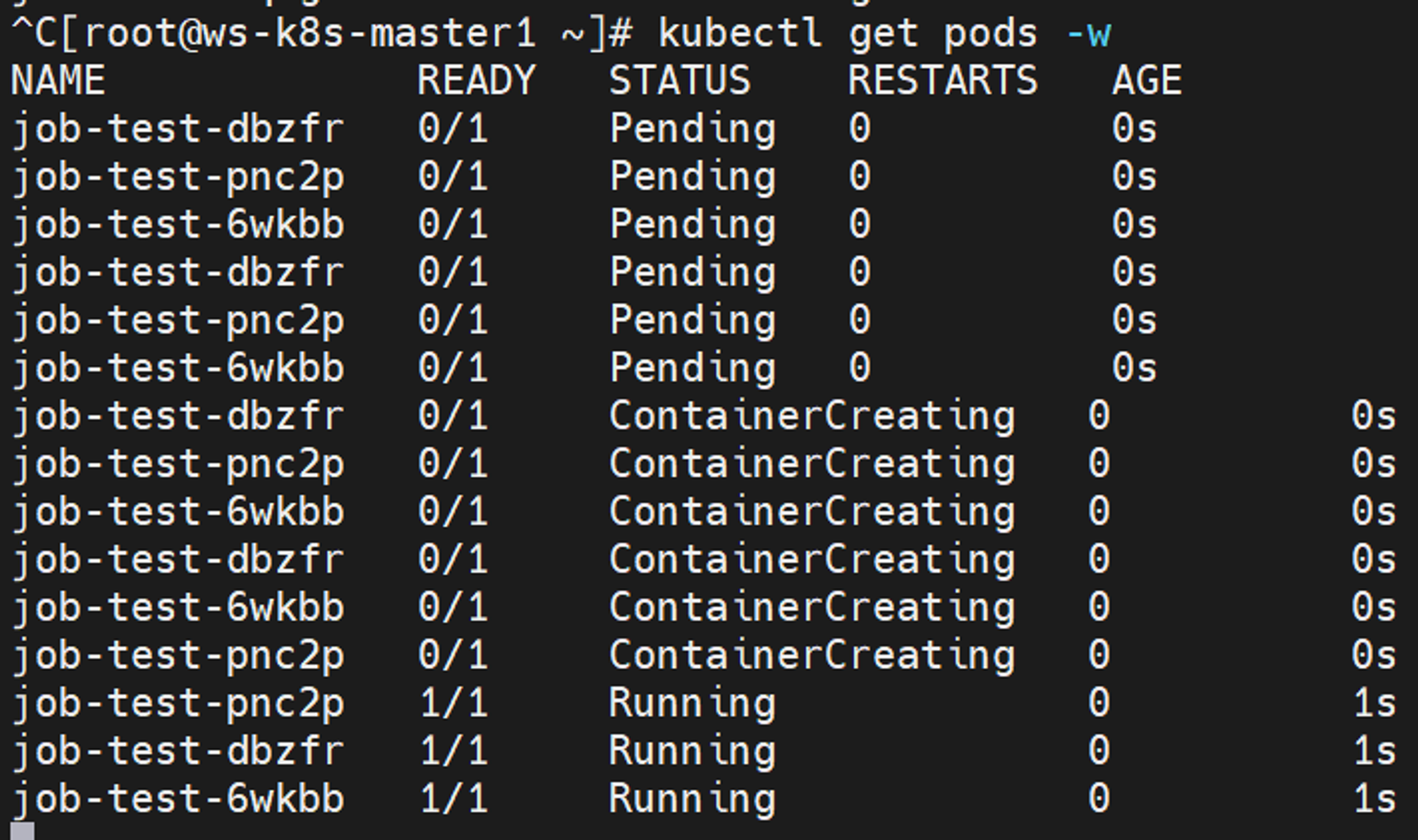

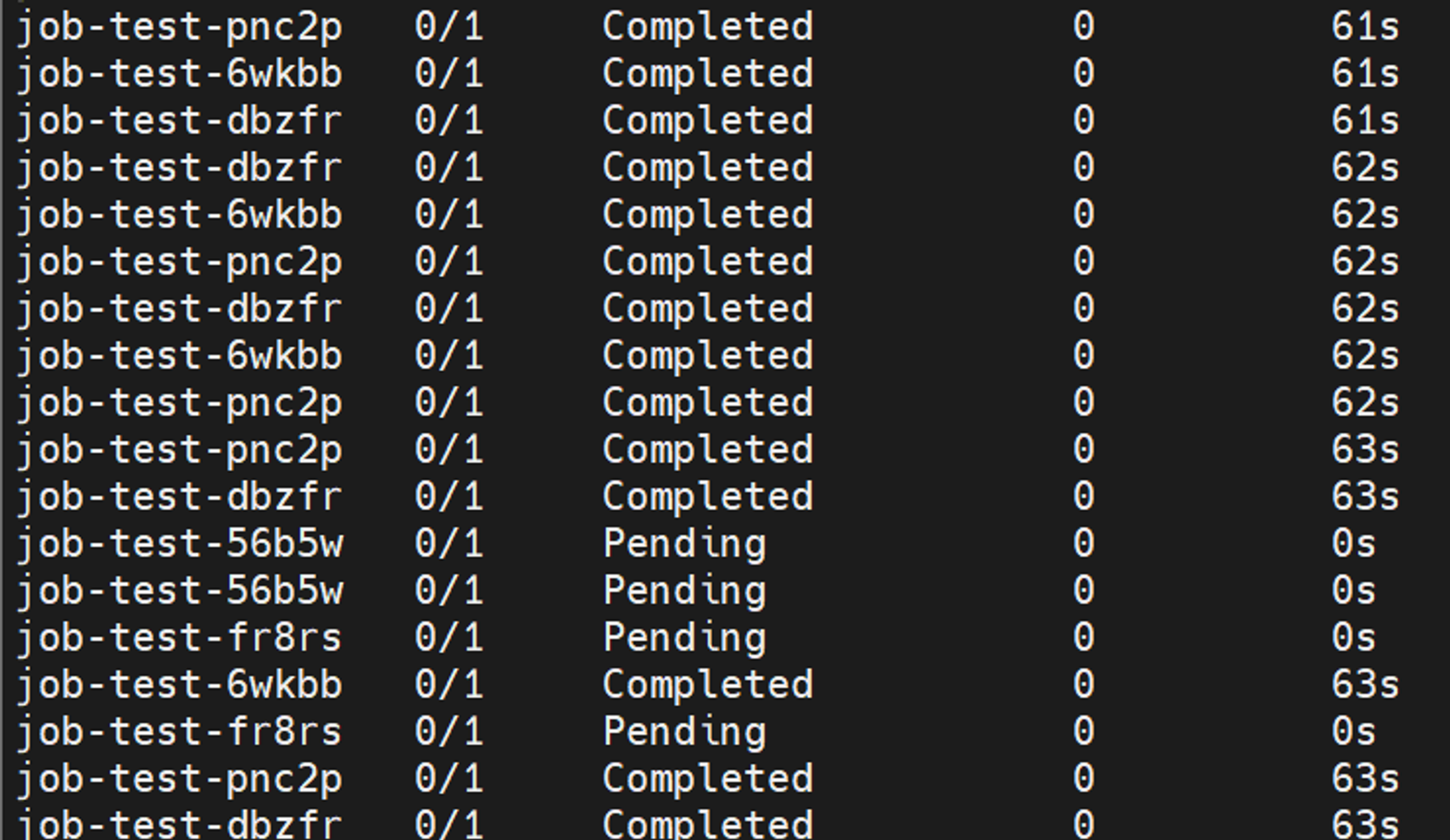

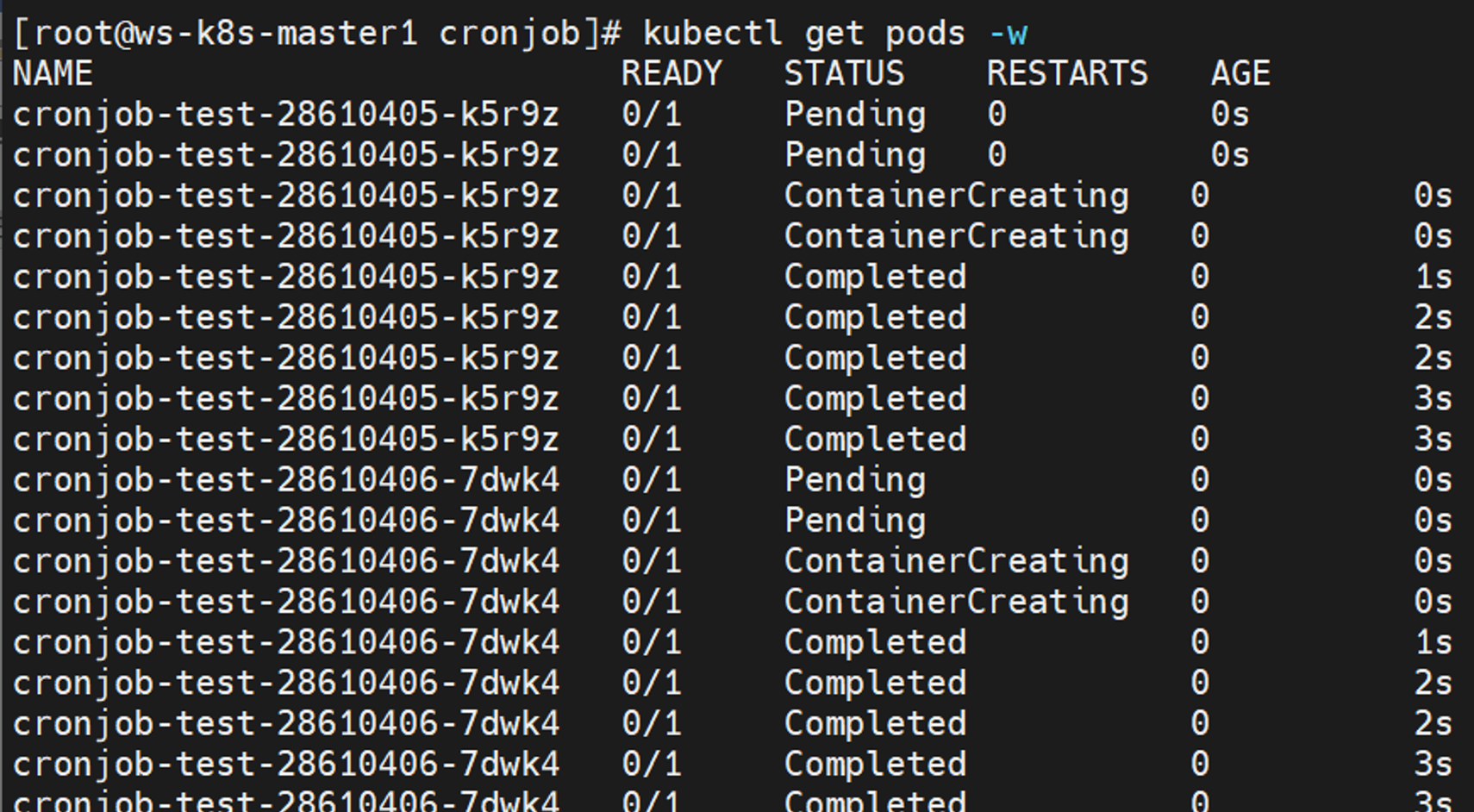

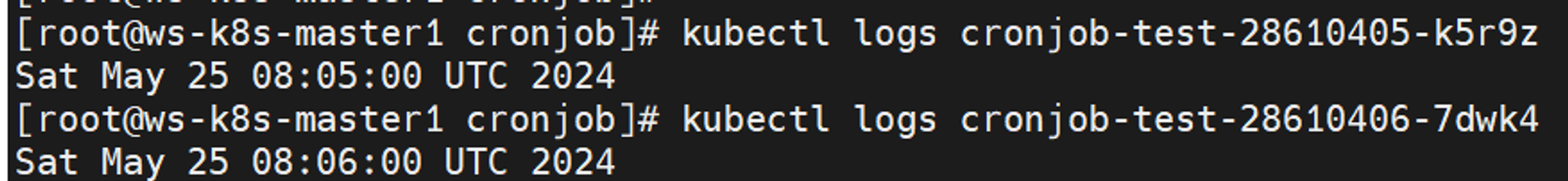

扩容与缩容 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 ... replicas: 5 ... kubectl apply -f dp.yaml kubectl get deploy NAME READY UP-TO-DATE AVAILABLE AGE dp-test 5 /5 5 5 13m kubectl get pods NAME READY STATUS RESTARTS AGE dp-test-648cf4f5-b82kv 1 /1 Running 0 3m29s dp-test-648cf4f5-dssv7 1 /1 Running 0 3m29s dp-test-648cf4f5-hbhmx 1 /1 Running 0 13m dp-test-648cf4f5-x9gb4 1 /1 Running 0 13m dp-test-648cf4f5-znktp 1 /1 Running 0 13m ... replicas: 2 ... kubectl apply -f dp.yaml kubectl get deploy NAME READY UP-TO-DATE AVAILABLE AGE dp-test 2 /2 2 2 14m